Why aren't All Songs Recorded at the Same Loudness and What is Wrong With Music Nowadays?

If you have a large CD and/or MP3 collection that contains more than just music from one short time period, you may have noticed that not all CDs or MP3s sound equally loud. The differences can be quite large: sometimes you really have to crank the volume, and at other times the first few notes of a new song or album may cause you to jump in the air and reach for the volume knob as fast as possible. You may also have noticed that you mostly have to turn down the volume with more recent music and turn it up with older music. Or, you may just have a feeling that recent music rubs you the wrong way but you can't pinpoint the reason. Why is this?

I wrote this article around the end of 2008, but I occasionally update it if I discover new interesting facts, so you may want to check back from time to time. I plan to add some amplitude histograms if I have the time.

Dynamic Range

The maximum amplitude of recorded sound waves is limited, either by the physical properties of the recording medium or by the digital representation. Digital audio is most commonly represented as 16-bit numbers, and these numbers can only represent a limited set of values. So you might be thinking that louder songs just go higher in these numbers, but this is incorrect: every well-mastered song will use (almost) the entire range that can be represented by 16-bit numbers. The actual reason why two recordings that employ the same range of values on the same medium can differ in perceived loudness, will be explained further on.

The dynamic range of a recording medium is defined as the highest amplitude it can represent, divided by the smallest amplitude it can (reliably) represent. For 16-bit digital audio (such as the CD or decoded MP3 audio) this is 96dB. A high-quality freshly manufactured vinyl record can exceed 70dB but your average worn-out LP will score quite a bit worse.
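The 96dB figure follows directly from the number of amplitude steps a 16-bit number can distinguish. A minimal sketch in Python, assuming the usual convention that amplitude ratios are expressed as 20 times the base-10 logarithm (the function name is only illustrative):

```python
import math

def dynamic_range_db(bits: int) -> float:
    """Ratio between the largest and smallest representable amplitude, in dB."""
    # An n-bit sample distinguishes 2**n levels; amplitude ratios use 20*log10.
    return 20 * math.log10(2 ** bits)

print(round(dynamic_range_db(16), 1))  # 96.3
print(round(dynamic_range_db(1), 2))   # 6.02, i.e. roughly 6dB per bit
```

The second line is the rule of thumb that each extra bit adds about 6dB of range.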

Human hearing ranges from 0dB(A) to 120dB(A), but anything above 100dB(A) is to be avoided because it will cause permanent hearing damage. In practice, any realistic ‘silent’ environment will have a background noise level of about 30dB(A). This explains why the 70dB of vinyl records was adequate. All the CD did was increase the margin, cater for perfectly silent environments, and of course avoid the gradual degradation of an analog medium. Mind that I am mixing dB(A) and dB here, which is not exact science. Yet, even when taking large error margins into account for this, it is obvious that the 96dB dynamic range of 16-bit audio is plenty for consumer audio.

Similarly, the dynamic range of a piece of music can also be defined. Evidently it cannot exceed the dynamic range of the medium it was recorded on. It is however far less obvious to provide a strict definition of the dynamic range of a recording than for a recording medium. Roughly it is also the loudness of the loudest peaks in the song, divided by the loudness of the most silent parts. This definition is too rough though, because it would assign maximal dynamic range to a recording of a non-stop car horn at maximum amplitude with only one second of pure silence somewhere in the middle. Anyone would agree that this has virtually no range at all. It makes more sense to chop up the song into short fixed-length parts and average out the dynamic range measured over each part. Or better, to obtain statistics sampled densely across the entire recording and somehow derive a single dynamic range figure from that. However, I know of no real standard method for this.
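The car horn objection can be made concrete with a small sketch. It is only one possible reading of the "chop up and average" idea, not a standard method: the part length, the window length, the −96dB floor and all function names are assumptions chosen for illustration.

```python
import math

FLOOR_DB = -96.0  # assumed floor for digital silence, roughly the 16-bit limit

def level_db(window):
    """RMS level of a window in dB relative to full scale (1.0)."""
    rms = math.sqrt(sum(x * x for x in window) / len(window))
    return max(20 * math.log10(rms), FLOOR_DB) if rms > 0 else FLOOR_DB

def chunks(seq, size):
    """Split seq into consecutive full-length pieces of `size` samples."""
    return [seq[i:i + size] for i in range(0, len(seq) - size + 1, size)]

def range_db(samples, window):
    """Naive range: loudest window minus quietest window."""
    levels = [level_db(w) for w in chunks(samples, window)]
    return max(levels) - min(levels)

def averaged_range_db(samples, part, window):
    """Measure the naive range inside each part, then average over all parts."""
    ranges = [range_db(p, window) for p in chunks(samples, part)]
    return sum(ranges) / len(ranges)

# Toy "car horn": a full-scale square wave with one silent gap in the middle.
rate = 1000  # toy sample rate, only to keep the example fast
horn = [1.0 if (i // 5) % 2 == 0 else -1.0 for i in range(20 * rate)]
for i in range(9500, 10500):
    horn[i] = 0.0

print(range_db(horn, window=10))                      # 96.0
print(averaged_range_db(horn, part=rate, window=10))  # 9.6
```

The naive figure reports the full 96dB because of the single gap, while the averaged figure is dominated by the parts in which nothing changes at all. The exact number still depends on the arbitrary part and window lengths, which is exactly why a standard would be needed.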

Dynamic Range Compression

It is perfectly possible to record two versions of the same song that both use the entire range of 16-bit digital audio, but with one sounding much louder than the other. The trick is to use dynamic range compression(1). If all silent parts in a song are boosted up to a higher level, the song will sound louder on average. The catch is that by reducing the difference between the lowest and highest amplitudes, the song loses part or all of its variations in volume. Its dynamic range will be effectively reduced. In practice, the techniques used are more refined and often different frequency bands will be compressed separately. The latter is called “multi-band compression”.
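The basic idea of boosting the silent parts can be sketched in a few lines of Python. This is a deliberately crude illustration under stated assumptions: it works on fixed blocks without any attack or release smoothing, it is single-band, and the threshold, ratio and block size are arbitrary values. Real compressors are considerably more refined.

```python
import math

def upward_compress(samples, block=64, threshold_db=-6.0, ratio=4.0):
    """Boost blocks whose peak lies below the threshold; louder blocks pass unchanged.

    Samples are floats with full scale = 1.0.
    """
    out = []
    for start in range(0, len(samples), block):
        chunk = samples[start:start + block]
        peak = max(abs(x) for x in chunk)
        gain_db = 0.0
        if peak > 0:
            level_db = 20 * math.log10(peak)
            if level_db < threshold_db:
                # Move the block level `ratio` times closer to the threshold.
                gain_db = (threshold_db - level_db) * (1 - 1 / ratio)
        gain = 10 ** (gain_db / 20)
        out.extend(x * gain for x in chunk)
    return out

# A quiet passage followed by a loud one (made-up test signal).
quiet = [0.05, -0.05] * 32
loud = [1.0, -1.0] * 32
result = upward_compress(quiet + loud)
print(max(result[:64]), max(result[64:]))
```

The quiet block comes out several times larger while the loud block is untouched, so the difference between the two, which is the dynamic range, shrinks.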

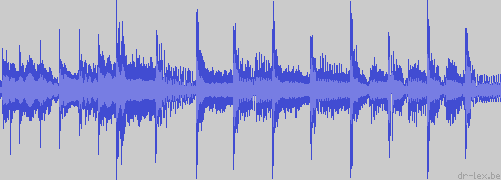

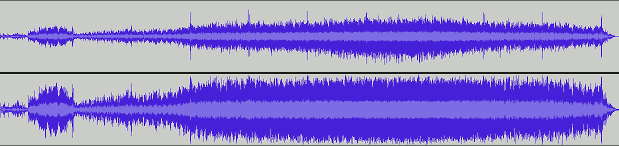

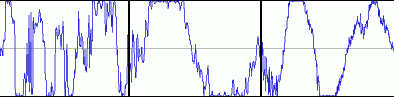

The following figures illustrate dynamic range compression. I took part of the intro of ‘Money for Nothing’ from Dire Straits from 1985, one of the first songs to demonstrate how much more dynamic range the CD offered compared to records and tapes. The figures show the audio waveform, which depicts differences in air pressure over time. The centre line corresponds to silence and the top and bottom of the image correspond to the most positive, respectively negative change in sound pressure that can be represented. This waveform is the kind of information stored on a CD or in an MP3 file.

As you can see, the waveform covers the entire range and has sharp peaks corresponding to the snappy drums, hi-hats and cymbals. Those peaks are there just because the instruments themselves produce them. By faithfully reproducing the peaks, the recording sounds ‘live’ as if the musicians are sitting where your loudspeakers are. Now, the only way to make this sound louder without touching the volume knob on the amplifier hooked up to the CD player/iPod/whatever, is to alter the waveform in the following way:

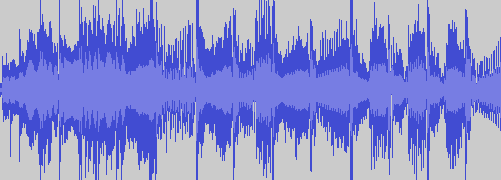

What I did here is use a fairly aggressive compressor on the sound waves, considerably boosting each silent part whenever it goes below a threshold for more than a few milliseconds. The waveform still occupies the entire range, but spends more time near the maximum values, hence on average it will sound much louder. Now, suppose I had already tuned my amplifier's volume knob such that the first waveform shown above corresponds to an agreeable loudness. My new inflated sound wave will sound way too loud on the same setting, hence I turn down the knob until it sounds more or less as loud as the original. This proved to correspond to a difference of about 8dB:

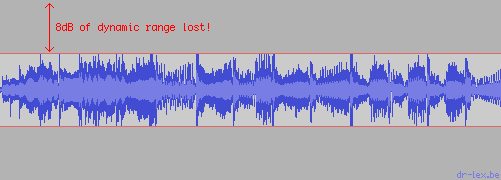

As you can see, the waveform looks similar to the first one, with the big difference that all sharp peaks have been obliterated. They are gone and there is no way to get them back without knowing what the original waveform actually looked like. The area outside the two red lines in this image cannot be used anymore, because the area in between them now corresponds to the full 16-bit amplitude range. In audio mastering terms, we say that the compressed version of the track has less headroom than the original, which could still use this extra amplitude range.

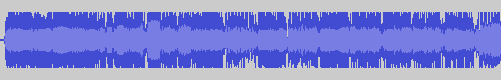

Compressing a song is akin to putting a car into a car crusher. Restoring the dynamic range is like trying to reconstruct the original car from that crushed lump of steel. Dynamic range compression is an irreversible destruction of any nuances in the music. It is easy to go from the uncompressed waveform to the compressed one, but the inverse is extremely hard if not impossible(2). The compressed version sounds similar to the original but it lacks the punch and liveliness because everything has been brought to the same amplitude. It is like taking the score for a classical composition and erasing all dynamic indications from ‘pianissimo’ to ‘fortissimo’. Mind that the above was still a reasonable example. If this song had been recorded in 2010 and Dire Straits had been as hip as Lady Gaga, the producers would probably have done everything to make every sound wave splatter against the most extreme levels. In other words, the waveform could look like this:

Yes, this is still the same piece of music — at least what's left of it. I have some actual MP3s that look like this and they sound like s**t. This illustrates that aggressive dynamic range compression squeezes all the punch and life out of a song, turning it into a continuous car horn that only changes its tone. It makes the music less interesting and more fatiguing to listen to. Heavily compressed music often sounds as if it is choking and gasping for breath when all the other instruments are momentarily muted by a loud drum sound. You can either turn up the volume and then the song parts that should have been silent will sound too loud, or you can turn down the volume and the parts that should have been loud will be too silent.

In all these examples the compressor was configured to maintain the shape of the sound waves. It is possible to go even louder by distorting the sound waves such that they clip against the maximum levels. So you may be wondering, why would anyone do such a thing? Certainly no professional sound producer would even think of applying such an insane degree of compression, right?

Wrong.

There is one appealing aspect to dynamic range compression: actually the only ‘advantage’, as illustrated above, is that compressed music sounds louder if one plays it after a recording with a less aggressive compression without touching the volume knob. Of course, a DJ or electronic limiter on a radio station, or anyone listening on an iPod or Hi-fi installation will just turn down the volume if your song sounds louder than someone else's. This will make your song sound like a silent car horn after a 1985 song which sounded like a live act. So actually there is almost no point in aggressively compressing your music except to make it sound boring. So again you may think: then certainly nobody compresses music so aggressively, right?

Wrong.

The Loudness Wars

Dynamic range compression made sense in the days of low-quality vinyl records and cheap cassettes, because those had such poor dynamic range themselves that the music constantly needed to be at a high volume to mask the background noise. When the CD became popular, most new albums (like “Brothers In Arms” from Dire Straits) embraced its huge 96dB (theoretical) dynamic range. Of course, a certain degree of compression remained necessary to get a balanced overall sound, but for about a decade music kept on being mastered with an awesome dynamic range and all was good.

The dynamic range of recordings, however, has been declining steadily since 1990, and at the time of this writing some albums are at what I consider the limit of dynamic range compression. “By the Way” from the Red Hot Chili Peppers is an example: I tried to squeeze some more dynamic range out of the song “By the Way” with the most aggressive compressor possible, but I could only remove an additional 1dB, which is barely perceptible. If one went any further, the music would simply start to sound awful.

The increase of perceived loudness at the cost of declining dynamic range is often referred to as the “Loudness Wars”. Most producers seem to assume that nobody knows what a volume knob is and don't want their album to sound less ‘loud’ than the competition when played on a fixed-volume system, so they will compress it either just as much or more. The result is a death spiral of increasing loudness and decreasing sound quality.

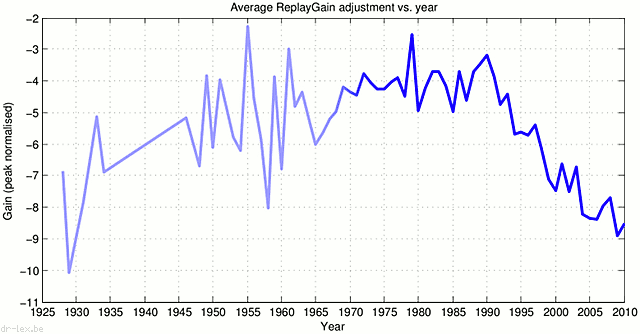

I have been able to measure the effect of the Loudness Wars on my own music collection. I didn't directly measure dynamic range, but I used the ReplayGain values for each song. In short, ReplayGain (RG) computes an ‘ideal’ volume adjustment to make each song sound equally loud at a certain reference level. It's similar to the ‘Sound Check’ feature built into iTunes, but more refined (it still is not perfect though). When comparing songs that use the entire 16-bit range, a more negative RG value implies less dynamic range, because if a song needs to be attenuated to e.g. −10dB, it can never attain any amplitudes above −10dB (cf. the compression example above). A song with a RG value of 0dB (no change) on the other hand has 10dB of extra headroom to produce interesting volume variations. In other words, when all songs have been normalised such that their waveforms span the entire 16-bit range, their ReplayGain value is a good indication of their dynamic range (up to some fixed offset). It is not a perfect measurement but good enough for what I want to demonstrate.
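A minimal sketch of how a peak-normalised value can be derived from an ordinary ReplayGain value, assuming the track's peak sample value is known (ReplayGain tags normally store a peak alongside the gain). This is an assumption about one workable procedure, not a description of the exact script behind the graph, and the example numbers are made up.

```python
import math

def peak_normalised_gain_db(rg_gain_db: float, peak: float) -> float:
    """ReplayGain value the track would have if scaled so its peak hits full scale.

    `peak` is the largest absolute sample value, with full scale = 1.0.
    """
    # Normalising raises the track by -20*log10(peak) dB, so the gain needed
    # to reach the reference loudness drops by the same amount.
    return rg_gain_db + 20 * math.log10(peak)

# Hypothetical track that peaks at half of full scale with an RG value of -4dB.
print(round(peak_normalised_gain_db(-4.0, 0.5), 2))  # -10.02
# A track that already reaches full scale keeps its value.
print(peak_normalised_gain_db(-10.0, 1.0))           # -10.0
```

After this correction, two tracks can be compared as if both used the entire 16-bit range, which is the condition under which the gain value says something about dynamic range.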

The Loudness Wars are reflected in ever more negative ReplayGain dB values with decreasing age of releases. The graph below shows the average peak-normalised RG gain per release year for a collection of about 4000 songs. The songs aren't uniformly distributed, but most are from the period 1990-2005, which is the period we're interested in.

To give a frame of reference, I did some tests with ReplayGain. A 440Hz peak-to-peak square wave, which is about the loudest possible ‘sensible’ sound, causes a RG value of −18dB. Many current songs are at −12dB, so there's only 6dB distance between current ‘music’ and a car horn. (It is possible to go even louder: the limit seems to be a 3700Hz square wave, which will push ReplayGain down to −24dB.) The worst song in my collection is ‘Parallel Universe’ (you can guess the artist) at −14.1dB. Only 3.9dB distance! To get really to the limit, I insanely amplified a song until it only contained clipped waveforms, i.e. amplitudes of −1 and 1. The result has a RG gain of −19dB and is painful to listen to even at low volume(3). Hence, if the above graph were to continue its downward trend, music in the year 2020 would have degenerated to this kind of awful noise.
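Why a square wave is about as loud as a signal can get is easy to check: it spends all of its time at the extremes, so its average (RMS) level equals its peak level. The sketch below compares it with a sine wave of the same peak amplitude. It measures plain RMS only and does not reproduce ReplayGain's frequency weighting, so it does not explain the specific −18dB and −24dB values quoted above.

```python
import math

def rms_dbfs(samples):
    """RMS level in dB relative to full scale (1.0)."""
    rms = math.sqrt(sum(x * x for x in samples) / len(samples))
    return 20 * math.log10(rms)

rate, freq = 44100, 440
n = rate  # one second, which holds a whole number of cycles
sine = [math.sin(2 * math.pi * freq * i / rate) for i in range(n)]
square = [1.0 if s >= 0 else -1.0 for s in sine]

print(round(rms_dbfs(square), 2))  # 0.0
print(round(rms_dbfs(sine), 2))    # -3.01
```

Both signals touch the same maximum values, yet the square wave carries about 3dB more energy. Heavy compression and clipping push real music in the same direction.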

Shellac Records and Car Horns

One could be tempted to conclude from the above graph that the dynamic range of songs in 2009 has dropped back to the same level as that of hissing and crackling shellac records around 1929. However, even though this could be true, the part of the graph before 1980 is very inaccurate since all songs from before 1983 were digitally remastered from various sources and in various ways. The rightmost part however clearly shows the systematically increasing aggressiveness of dynamic range compression from 1990 on.

It is even so bad that if you buy a new compilation of old music, the tracks on that compilation are likely to have been compressed too and stripped of their original dynamic range. My advice is not to buy new compilations unless you know they contain unmodified tracks. Buy the original albums instead, if you can still get hold of them. The following image shows an example of this: the upper half is the waveform for the original “Michael Woods Ambient Mix” of “Café del Mar” by ‘Energy 52’ from 2002. The lower part shows a copy taken from a compilation from 2006. According to ReplayGain, the latter is 5.5dB louder. It is obvious that the waveform had to be distorted to achieve this(4).

It does not end there: often, remasterings of popular albums are released. The goal of a remaster should be to make a better quality version of the exact same album by using more modern equipment than was available at the time when the original album was released. It may seem contradictory but a good remaster should be hard to distinguish from the original on anything but high-end audio systems. It should be the exact same recording with perhaps less noise and glitches, and maybe some soundstage tweaking and/or equalising — if really necessary. In other words, less distortion. However, nowadays there is a considerable risk that the person responsible for the remaster will succumb to the trendy temptation of making it sound ‘louder’. This will make the remaster actually contain more distortion than the original and it will be more suited for pressing on a vinyl record or recording on a cassette. I guess records and cassettes are the next big thing in consumer audio equipment, and will soon replace the outdated CD and MP3 players. Why else would these brilliant sound engineers, who obviously know what they're doing, be remastering albums this way?

Of course I am being sarcastic here. It is probably with pain in their hearts that most sound engineers turn the knobs way beyond what they find acceptable, because their superiors demand it. They have spent a lot of creativity and sweet time on making that greatly improved master and then someone says: OK, now run it over with a steamroller or you're fired.

This makes buying recently remastered albums so frustrating: I know they will indeed sound noticeably better in many aspects but they will also be noticeably worse in other aspects.

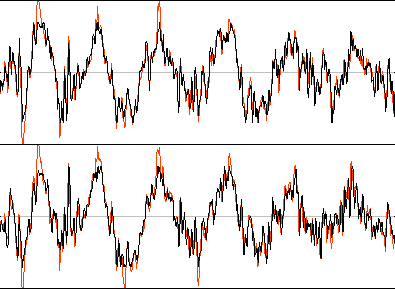

You can see an example below: it is a fragment from the album “Industrial Silence” by Madrugada. The stereo waveform from the original 1999 album is shown in red, with the waveform of the 2010 remaster overlaid in black, after adjusting the volume for equal loudness.

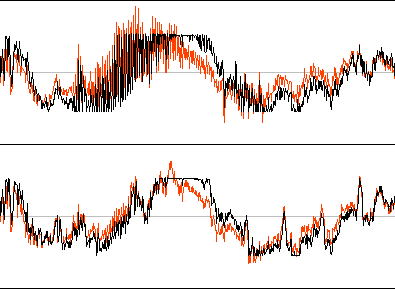

In this case the difference is not very large because the loudness wars were already in full effect in 1999. What happens when the original album was older? Well, if you buy a remaster of an eighties album nowadays, you can be pretty certain that it too has been trimmed with the dynamic range lawnmower in an attempt to make it more competitive with current hit chart drivel on the ‘unadjusted volume’ front. The following image shows the same kind of overlay for a 25 millisecond fragment out of Michael Jackson's “Off the Wall” album. The black waveform is from the 2009 remaster, which is about 5dB louder. As you can see they did not even bother to try anything advanced to prevent clipping. The waveform is simply amplified and brick-wall clipped.

I have seen this on other albums as well. My guess is that they take the old master and amplify it such that say 1% of all samples are crushed against the brick wall of the digital extremes. I have also seen cases where they tried to be smart by first smashing the waveforms this way and then slightly reducing the amplitude again, to make the album stay under the radar of simple clipping detectors. Of course in practice this makes no difference. If you're lucky, those 1% of samples will all be very sharp peaks and the distortion caused by clipping them will not be audible. If you are less lucky, those samples will be grouped and their clipping will result in an audible crackle that cannot be removed by any tweaking of your sound system. Unlike analog media which have a kind of graceful degradation at their extreme levels, digital clipping is instant and hard, and the resulting distortion is therefore maximally unpleasant.
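Amplify-and-clip, and the trick of turning the level down again afterwards, can be imitated with a short sketch. The detector below is an assumption about how one could look for flat tops; it is not how any particular commercial clipping detector works. Instead of looking for samples at digital full scale, it looks for runs of consecutive samples stuck at the track's own peak magnitude, so a later volume reduction does not hide them. The sample values are made up.

```python
def amplify_and_clip(samples, gain, limit=32767):
    """Scale integer samples and brick-wall clip them to +/- limit."""
    return [max(-limit, min(limit, round(x * gain))) for x in samples]

def flat_top_runs(samples, min_run=3):
    """Return (start, length) for runs of samples stuck at the peak magnitude."""
    peak = max(abs(x) for x in samples)
    runs, start = [], None
    for i, x in enumerate(samples):
        if peak > 0 and abs(x) == peak:
            if start is None:
                start = i
        elif start is not None:
            if i - start >= min_run:
                runs.append((start, i - start))
            start = None
    if start is not None and len(samples) - start >= min_run:
        runs.append((start, len(samples) - start))
    return runs

# One made-up cycle of a smooth waveform, well below full scale.
wave = [0, 12000, 20000, 24000, 20000, 12000,
        0, -12000, -20000, -24000, -20000, -12000]

clipped = amplify_and_clip(wave, 1.8)
hidden = [round(x * 0.9) for x in clipped]  # turned down again after clipping

print(flat_top_runs(wave))     # []
print(flat_top_runs(clipped))  # [(2, 3), (8, 3)]
print(flat_top_runs(hidden))   # [(2, 3), (8, 3)]
```

The attenuated version no longer contains a single sample at full scale, but the flattened peaks are still there and still sound the same.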

Squeezing Bits

Really, I do not understand why people believe old albums can be improved by destroying their original dynamic range. It baffles me how producers can still believe in the flawed ideas behind the whole loudness wars but this butchering of ‘classic’ albums puzzles me the most. Why would they need to sound louder? The young target group for loud albums does not care about those old albums anyway, they want to hear the latest new stuff. Really the only advantage in terms of remastering quality is that if overall amplitude can be boosted by 6dB — which is about the maximum if the music is not to be maimed too obviously — one bit of digital resolution can be gained. Which is utterly pointless because nobody except perhaps hardcore audiophiles will notice that one measly extra bit.

There is no doubt that aggressive dynamic range compression (DRC) is useful in certain situations. The main question of this whole article is: why don't we just put the DRC in each playback device and make it optional, such that consumers can choose to sacrifice dynamics for signal-to-noise ratio whenever they are in those certain situations? Put otherwise, is there any benefit from remastering albums and steamrollering all dynamics for the sake of ensuring 16-bit resolution at any moment in time? Let's start with those specific situations where DRC is desired. I can only think of three:

- The consumer wants to listen to music in an environment that has so much background noise that the silent parts are drowned out.

- The consumer has busted their own ears and has constant tinnitus that is equivalent to constant background noise that drowns out silent parts.

- The consumer wants to turn down the volume such as not to disturb other people nearby, but still wants to hear all parts of the music.

Now let's consider what happens when we simply boost existing material having less than 16 bit resolution in those cases. The first two cases are equivalent. Essential about them is that there is a lot of background noise, the whole reason why the listener wants to boost the silent parts. Assume the background noise is at 60dB(A) and a part that only stretches a 13-bit range is being boosted to 16-bit range by a compressor in the playback device. This would amplify the quantisation noise as well, by 18dB. Will this matter? No! If the quantisation noise was unnoticeable at a normal background level of 30dB(A), then even after amplification it will still be completely masked by 60dB(A) of noise. In the third case of turning down the volume, the DRC will in fact only affect the loud parts there, and leave the silent parts untouched. Adding more resolution to the silent parts is futile. In other words, the argument of squeezing more bits of resolution out of the limited 16-bit range is bogus. The DRC belongs in the playback device, not on the album itself.
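The 18dB figure and the masking argument can be checked with a few lines of Python. The sketch reuses the numbers from the paragraph above and inherits its loose mixing of dB and dB(A), so it is a rough plausibility check rather than an exact calculation.

```python
import math

def noise_boost_db(used_bits: int, target_bits: int = 16) -> float:
    """Gain needed to stretch a passage using `used_bits` up to `target_bits`."""
    return 20 * math.log10(2 ** (target_bits - used_bits))

boost = noise_boost_db(13)
print(round(boost, 1))  # 18.1

quiet_room_db, noisy_room_db = 30, 60  # background levels taken from the text
# If the quantisation noise was at or below the quiet-room background before,
# the boosted noise still stays below the noisy-room background.
print(quiet_room_db + boost < noisy_room_db)  # True
```

Three bits correspond to about 18dB, which leaves a margin of roughly 12dB before the amplified noise would even reach the 60dB(A) background.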

The majority of people listen to their music through an MP3 player nowadays. As soon as music is encoded to a lossy format like MP3 or AAC, those few bits of extra resolution get totally drowned in quantisation noise. The whole point of these formats is to throw away most of the resolution in a way that has minimal audible impact. The fact that music can apparently be represented adequately with on average as little as three bits per sample is another indication that nobody will notice a boost of a few bits. Only in very silent parts might the reduction in noise floor level be noticeable, but there will be no silent parts because destroying them is the whole point of the loudness wars anyway! The loss of dynamics and added distortion will remain noticeable though. Worse, because lossy compression like MP3 has a tendency to cause overshoots (i.e. it makes sound waves extend further in amplitude than the unencoded waveform), there is a risk of additional clipping and therefore distortion. This is also why I am somewhat wary of services like ‘iTunes Match’. It will likely use recent remasters for the ‘matched’ songs, so don't be surprised if songs sound different between your mobile device and your main computer.

In the first decade after the CD became mainstream it was heavily frowned upon if an album contained more than just a tiny fraction of samples near the most extreme digital values. Even a single sample at those extremes (i.e. −32768 or 32767 for the signed 16-bit samples used on a CD) was considered a recording flaw. There were even electronic devices that one could hook up to a CD player, which alerted when significant ‘clipping’ was detected. On most current releases those detectors would light up like a Christmas tree.

The image below shows three waveform fragments from the song “Dani California” by the Red Hot Chili Peppers, and I really didn't need to search long to find them: the track is full of clipping sound waves. Such an extreme degree of clipping becomes clearly audible as a kind of crackling noise. Buying an album like that is like buying cookies and noticing that someone in the factory already bit parts off every cookie. Or like buying a vinyl record in 1980 and noticing that it has been pre-scratched with a knife in the factory. Or in my car example, buying a new car that has already been crushed. All good recording practices have been thrown out of the window just to get those few extra decibels on the audio systems of people too lazy to adjust their volume knob.

Racket

Of course, there must be some reason why music is systematically being destroyed nowadays, and there is. I believe the whole drive behind the Loudness Wars is the flawed idea that “louder is better.” When you're going to buy a sound system in a store and the salespersons ‘helping you out’ know what they are doing, they will play music slightly louder on the system they want to sell you (i.e. probably the most expensive one). Even if a system produces a slightly poorer sound, it will still sound “better” to an untrained listener if it plays louder and the listener is not given enough time to detect any shortcomings. The music industry has picked up on this and has applied it to albums, assuming that people will do a cursory comparison on an identical sound system when deciding which album to buy.

In the absence of loudness compensation the “hot” music, as it is often called in the industry, does appear to sound ‘better’ the first few seconds you hear it. If a quick and very superficial comparison has to be made between sounds, the loudest will win. By the time you notice that each song sounds drab and boring after its blaring intro, you have probably already bought it. Moreover, if an album is mastered louder, it is possible to get more loudness out of the same audio system, if distortion is ignored. Hence teenagers who mostly don't care if stuff starts clipping and crackling, can get more racket out of their cheap audio systems, iPods and tinny cell phone speakers and destroy their hearing more effectively.

In this respect, the loudness restrictions for MP3 players imposed by the European Union and other authorities are not helpful; in fact they only contribute to the loudness wars. Although those restrictions specify a maximum decibel value, manufacturers won't make the effort to build an accurate loudness meter into their devices. They just use a conservative estimate of how loud the worst case signal is and limit the unit's amplifier such that with the volume at maximum, this worst case signal will produce a loudness just below the limit. Of course, properly mastered music is much more silent than this worst case signal for the simple reason that the worst case signal is awful noise. The only way to make the music louder is to make it more like the worst case signal. The whole concept of these restrictions is completely flawed anyway, even if the MP3 player were to measure the loudness of the output signal accurately. The loudness depends heavily on the kind of head- or earphones that are plugged in, and one can always go louder with an extra headphone amplifier.

Of course, the trend towards boring music which constantly has the same volume fits within our disposable society: it may seem great that people will get bored of a song after a few listens so they will want to find new songs. A nasty side effect the record companies do not want to understand is that people do not want to pay much, if anything at all, for music anymore because they know that the new songs will be just as boring and annoying after a few listens. I had no problem shelling out the regular CD price for ‘Brothers in Arms’ even 20 years after its release, but I am very hesitant to buy a modern album even from the bargain bin because I might hate it after listening to it only twice. I have long given up on most mainstream music anyway because it all sounds the same, and that's not just because it all has zero dynamic range. Everyone is trying to throw all the same success formulas of the past few decades together, with often an overdose of the horrible ‘AutoTune®’ sauce on top to mask the incompetence of the singer or just to be trendy. All songs go through exactly the same mastering pipeline, meticulously constructed by people who believe it is the ultimate way to produce music. What comes out of that pipeline is a constipated slurry of non-stop ‘perfection’. It is all so clean, polished, and similar that it becomes utterly boring very fast, because there is no offset to make that perfection stand out. Maybe Michael Jackson, the King of Pop, didn't die because of wrong medication but because of the death of Pop itself.

The Consumer is a Criminal

The RIAA and friends often distribute preposterous reports in which massive losses due to piracy are claimed. The way in which those losses are calculated is naïve and simplistic: an estimate for the total number of pirated songs is multiplied by the profits that an average song produces when sold through an RIAA-approved channel. That's not how it works, folks. Of all copied songs, only a very tiny fraction would have actually been sold legally if it were impossible to copy music, because an awful lot of people just copy the song because they can, not because they really want it.

Moreover, many people learn to know good music through pirated copies. At some point they either want a better-quality copy and/or decide that the artists deserve to be paid and they buy the album anyway. Chances are that for the next albums of their newfound favourite band they'll skip the effort of pirating and buy them right away. Those are all profits that would not exist without piracy. By making music more disposable however, the risk increases that people will hate the music before coming to the stage of wanting to buy a legal copy. So the ever increasing destruction of dynamic range is yet another typical back-firing Music Industry practice, like the ridiculous copy protection ideas that almost killed the CD.

When I said ‘skip the effort of pirating’, I assumed that it is easier to obtain and use a legal copy than a pirated one, which is another important fact that the music and movie industries often overlook. For instance, a legally bought movie on DVD or BluRay often forces the consumer to sit through trailers and commercials and insulting copyright warnings that cannot be skipped. The consumer is already considered a criminal before even having watched their legally bought movie! That's one heck of a way to lower the threshold towards actual criminality. A big fat self-fulfilling prophecy waiting to happen. Likewise, DRM on music downloads is transparent at best, but in reality it is often an added hassle. By polluting music with DRM, one increases the inclination for people to obtain a DRM-free pirated copy.

Bringing Music Back to Life

Is there any way to encourage record companies to go back to making enjoyable music? Maybe. If it became mandatory to apply something like ReplayGain or Sound Check to each recording as a final mastering step, or if each and every audio playback device were equipped with a similar automatic loudness adjustment, there would be no point at all in over-compressing the music. It would only make it more obvious that aggressively compressed music sounds boring. Another enormous advantage would of course be that nobody would have to fiddle with the volume knob anymore between albums or songs. Please stop destroying music and start investing in research for such systems.
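The playback side of such a system is simple once a per-track gain value is known. A minimal sketch, assuming floating-point samples with full scale at 1.0 and a gain in dB obtained from something like ReplayGain; the function name and the choice to reduce the gain rather than clip are illustrative assumptions, and the hard part (measuring perceived loudness) is not shown.

```python
def apply_gain(samples, gain_db, peak_limit=1.0):
    """Scale samples by gain_db, reducing the gain if peaks would exceed peak_limit."""
    peak = max((abs(x) for x in samples), default=0.0)
    gain = 10 ** (gain_db / 20)
    if peak * gain > peak_limit:
        # Prefer being slightly too quiet over adding clipping distortion.
        gain = peak_limit / peak
    return [x * gain for x in samples]

# Made-up tracks: a 'hot' master that needs -10dB, a dynamic one that needs +3dB.
hot = apply_gain([1.0, -1.0, 1.0, -1.0], -10.0)
dynamic = apply_gain([0.9, -0.2, 0.1, -0.05], 3.0)
print(max(abs(x) for x in hot), max(abs(x) for x in dynamic))
```

With this in every player, the hot master simply gets turned down, and all that remains of the extra compression is its lack of dynamics.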

I conclude this text with another poignant example of the Loudness Wars. I'm kind of a fan of the band Mogwai. They pioneered a unique style of music in which silent parts alternate with or gradually build up to a wall of sound of roaring electric guitars. Here are the average ReplayGain values for each of their albums I have:

- Young Team (1997): −2.00dB

- Happy Songs for Happy People (2003): −7.54dB

- Mr. Beast (2006): −10.68 dB

- The Hawk is Howling (2008): −6.37 dB

As you can see, in nine years Mogwai lost a whopping 8.7dB of dynamic range. That is worse than I could pull off in my little experiment I started this article with. For a band whose style basically is dynamic range, that's just terrible. If any of you Mogwai guys read this, please punch your producer in the face. Unsurprisingly, ‘Mr. Beast’ is my least favourite album of theirs. Luckily, as their latest album shows — which I liked almost as much as ‘Young Team’ — they seem to have seen the error of their ways and went back in the right direction, somewhat. Apparently in 2008 they also re-mastered ‘Young Team’ and made it ‘sound louder’, and we all know what that means. I'm not interested in that re-master: if I want my original copy to sound louder I'll just crank the volume (as always).

Dynamic range compression is not all evil. A certain reasonable degree of compression will always be necessary to produce a properly mastered album. And even the exaggerated kind of compression that is so fashionable nowadays can be useful in certain situations, for instance when listening to your iPod in a noisy environment, or in a car where the constant rumble of the tyres and engine drowns out quiet sounds. Next to the silly “louder is better” idea, the fact that many people now consume music in such poor listening conditions has probably also motivated the guys behind the knobs in the recording studio to constantly max out the amplitude. Music devoid of any dynamic range is also more suited as background music, for which being uninteresting is actually a quality.

Heavily multi-band compressed music does sound better on the low-quality earbuds and the typical worthless, bass-deprived tiny portable speakers that many people connect to their iPods. This does not mean, however, that people with a perfect Hi-Fi system at home or noise-cancelling headphones should be denied the dynamic range when they want to enjoy a good album. Dynamic range compression can improve the listening experience on less-than-ideal devices, but it will limit the experience on high-end devices to something lower than the system could theoretically render. The overproduced music that roams the charts today is annoying to listen to on a good system or with good headphones. Instead of putting silly things like 20 useless equaliser presets with nonsensical names like “Jazz” in car radios and MP3 players, manufacturers should include a dynamic range compressor that can be enabled when desired. Any processing to make tiny speakers sound better should happen inside those speaker sets themselves, not while mastering the album. This is not really cutting-edge technology: I once had a cassette Walkman with a kind of compressor built in. Remember, it is easy to go from uncompressed to compressed music but the inverse is near impossible. Therefore it makes much more sense to master albums with the minimum required amount of compression, and allow the consumer to apply additional compression in their playback devices when desired. This also opens up a market for compression algorithms that can be licensed on a per-device-sold basis, which will be much more profitable than charging outrageous prices for those few units that can be sold to recording studios.
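To illustrate how little is needed for a playback-side compressor, here is a minimal sketch of a single-band, feed-forward compressor. It is only an illustration under my own assumptions: the threshold, ratio, and time constants are arbitrary, all names are hypothetical, and a real device would probably add make-up gain, look-ahead, and several frequency bands.

```python
import math

def compress(samples, sample_rate=44100, threshold_db=-20.0, ratio=4.0,
             attack_ms=5.0, release_ms=100.0):
    """Attenuate the part of the signal level that exceeds threshold_db."""
    attack = math.exp(-1.0 / (sample_rate * attack_ms / 1000.0))
    release = math.exp(-1.0 / (sample_rate * release_ms / 1000.0))
    envelope = 0.0
    out = []
    for s in samples:
        level = abs(s)
        # Envelope follower: rises quickly (attack), falls slowly (release).
        coeff = attack if level > envelope else release
        envelope = coeff * envelope + (1.0 - coeff) * level
        level_db = 20.0 * math.log10(max(envelope, 1e-9))
        over_db = level_db - threshold_db
        # Above the threshold, every `ratio` dB of input yields 1 dB of output.
        gain_db = -over_db * (1.0 - 1.0 / ratio) if over_db > 0.0 else 0.0
        out.append(s * 10.0 ** (gain_db / 20.0))
    return out

# Half a second of a quiet and a loud 440 Hz tone.
quiet = [0.01 * math.sin(2 * math.pi * 440 * n / 44100) for n in range(22050)]
loud = [0.9 * math.sin(2 * math.pi * 440 * n / 44100) for n in range(22050)]

print(max(abs(s) for s in compress(quiet)))          # quiet tone is left alone
print(max(abs(s) for s in compress(loud)[-4410:]))   # loud tone is pulled down
```

The quiet tone stays below the threshold and passes through untouched, while the loud tone is reduced, which narrows the gap between the two. Going the other way, from the compressed output back to the original, would require knowing the gain that was applied at every moment, and that information is not stored in the result.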

Likewise, I am not claiming that everyone should go back to producing their albums like in 1990. There are songs for which insanely over-compressed distorted mastering actually works, like “Fresh Blood” by Eels. That song scores a whopping −12.2dB ReplayGain value and its amplitude histogram is almost the same as that of pure noise. It just fits the style of that song; it would not be the same with clean mastering. However, it does not make any sense to apply this same style to all but the hardest rocking Red Hot Chili Peppers tracks or music like Mogwai's, and certainly not to ambient music.

From visitor statistics I can see that many people who reach this page typed things like these into internet search engines:

- why some music sounds better on cheap speakers

- nowadays music is just noise

- music sounds louder now

- overproduced music sounds the same

- how to find pre loudness war cds

- over compressed music sounds worse as mp3

- why do the red hot chili peppers songs crackle (one of my favourites)

It is funny to see this, and encouraging. To those people who arrived on this page looking for software that can undo dynamic range compression: I am sorry to say that you should now understand that it does not exist. Even if someone tried to make it, it would not work well, no matter what. And for the albums that were mastered so poorly that they clip and crackle, there is no hope at all. Any method that attempts to repair the clipped samples will also degrade other parts of the music. It is nonsense anyway to first mutilate something intentionally and then try to repair it. The only proper solution is to correctly master the album, period.
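Why clipping cannot be undone can be shown in a few lines. Hard clipping maps every sample beyond the limit to the same value, so different original waveforms end up as identical data. The sketch below is a toy example with made-up sample values, not data from any real album.

```python
def hard_clip(samples, limit=1.0):
    """Flatten everything beyond +/- limit, as over-amplified mastering does."""
    return [max(-limit, min(limit, s)) for s in samples]

# Two different peaks, both pushed beyond full scale (1.0).
original_a = [0.2, 0.9, 1.3, 1.8, 1.3, 0.9, 0.2]
original_b = [0.2, 0.9, 1.1, 1.2, 1.1, 0.9, 0.2]

print(hard_clip(original_a))  # [0.2, 0.9, 1.0, 1.0, 1.0, 0.9, 0.2]
print(hard_clip(original_a) == hard_clip(original_b))  # True
```

Since both originals give the same clipped result, no software can know which one it should restore. A repair tool can only guess at a plausible shape for the missing peak.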

The article “What Happened to Dynamic Range” explains all of this in greater detail and is a highly recommended read. Unfortunately the original website has somehow been taken over by someone promoting Japanese foot cream, so here is a link to the Internet Archive copy of the article.

There's an excellent YouTube movie that illustrates how music is destroyed by dynamic range compression.

Also take a look at the Turn Me Up! website.

If you're still convinced that over-amplifying your albums to the point that the waveforms start clipping will give you an advantage over properly mastered albums on radio broadcasts, you may want to read this.

And last but not least, you may be interested in reading what an actual sound mastering engineer has to say about the subject, or listening to the opinion of some famous producers.

October 2020 update: I have the impression that dynamic range has made a comeback in the last decade. Apparently people did become tired of over-compressed music. This doesn't mean it won't again rear its ugly head however, so the message I have is to remain vigilant.

(1): Dynamic range compression is not to be confused with ‘lossy compression’ as used by digital audio codecs like MP3, AAC, and Ogg Vorbis, which is a completely different thing. Dynamic range compression works on the amplitude of sound waves and alters the sound considerably. Lossy compression works on the digital data that represents the music, and only slightly alters the sound waves to allow a digital representation that requires less storage space. Ideally those small alterations are not noticeable to the listener.

(2): I actually tried undoing compression on the Doves song ‘The Outsiders’. My strategy was to do the inverse of a multi-band compressor, by splitting up the song into multiple frequency bands and trying to reconstruct realistic dynamics in each band. The latter is purely manual work and guesswork. To keep it tractable I only used three bands, but even that did not save me from many, many hours of sculpting amplitude envelopes. Parts of the song did improve a lot, but the inevitable result was still that it sounds like someone is fiddling with the volume knob, because three frequency bands are not enough. I would need to use more bands to get a better result, and spend even more time on it. No matter what I tried, the result would always be much worse than if the song had been properly produced from the start. Nevertheless, the original version now sounds even more flaccid to me because I got used to the extra dynamics.

(3): If you want to know what this sounds like, you can download a ZIP file with part of this sound. I reduced the volume to a normal level so that you do not blow up your speakers and ears. If you do believe this sounds awesome, put it on repeat and play it for five minutes. Then play some normal music and see what sounds better.

(4): You can download a sound fragment to hear how the music was massacred. It contains a fragment of the original version, followed by the same fragment from the 2006 compilation. Of course I normalised the volumes such that they sound equally loud. Even on tinny laptop speakers you will notice that the 2006 version sounds jittery and distorted, especially around the ‘beat’.