Why ‘3D’ Will Fail… Again

Thanks to James Cameron's ‘Avatar,’ 2009 saw a revival of the age-old concept of stereo cinematography, or as the average person calls it: “3D.” Film studios touted ‘3D’ as revolutionary and new, but it isn't. In fact, stereo photography and cinematography are almost as old as photography itself. You will find some astonishingly old examples in Wikipedia's article on Stereoscopy. By ‘old,’ I mean 19th-century stuff. Older people may also remember going to 3D movies in their youth, the only difference being that the technology was less advanced. There have been numerous ‘3D’ hypes in the past, and they have all died out. If you ask me, so will the current one. As a matter of fact, more than ten years have passed since I first wrote this article, and my prediction turned out to be correct. Why?

The major problem with ‘3D’, or stereoscopy, is that it does not add much compared to plain two-dimensional photography or cinema. On the other hand, it comes at a considerable extra cost, both for creating the stereoscopic material and for viewing it. Little reward for a big cost is a simple equation that spells doom for the future of 3D cinema.

Make no mistake: there will be another 3D hype. By the time everyone has forgotten how unrewarding stereoscopic cinema is, the industry will try it again, and it will fail again unless someone finds a good solution for the fundamental problems I describe below.

What is ‘3D’ or stereoscopy really?

For someone like me, who wrote his master's thesis on rendering virtual 3D views from a set of photographs and who has a PhD related to computer vision, it is pretty obvious what ‘3D’ means. But for the average consumer it is often nothing but a trendy, cool-sounding buzzword. This is one of the reasons why they cannot even begin to see what the problems are with the current ‘3D’ hype.

In general, ‘3D’ means there are three dimensions. A photograph is 2D because it has only two dimensions. It is flat and each point on its surface can be described by two numbers, e.g., the distance x from the left edge and the distance y from the bottom edge (Figure 1). In technical terms those distances are called coordinates. The physical world we live in is 3D because three coordinates are required to describe a point in a volume.

Many living creatures have two eyes (some even more) because, besides providing a backup in case one eye fails, this allows them to see in ‘3D.’ Each eye sees a 2D image because the eye's retina is 2D: the lens projects a flat image of the 3D scene onto it. However, finding correspondences between two such images taken a short distance apart makes it possible to reconstruct the third dimension. This principle is called stereoscopy, and the actual calculation of the depths is called triangulation (see Figure 2). Luckily we don't need to bother with those calculations: they are hard-wired into our visual cortex through a combination of evolution and a learning phase when we were infants.
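For a pair of idealised, rectified pinhole cameras, triangulation reduces to a single division: depth Z = f·B/d. Here f is the focal length in pixels, B is the baseline between the two viewpoints, and d is the disparity, meaning how far a matched point shifts between the two images. The camera model is an assumption, and our visual system certainly does not literally run this formula. The numbers below are illustrative: a 1000-pixel focal length and a 6.5 cm baseline, which is roughly the human eye separation.

```python
def depth_from_disparity(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Triangulate depth for a rectified, parallel pinhole stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("a point in front of both cameras must have positive disparity")
    return focal_px * baseline_m / disparity_px


# Illustrative values: 1000 px focal length, 6.5 cm baseline.
for disparity in (65.0, 6.5, 0.65):
    depth = depth_from_disparity(1000.0, 0.065, disparity)
    print(f"disparity {disparity:6.2f} px -> depth {depth:7.2f} m")
```

Disparity is inversely proportional to depth, so far-away points produce tiny disparities. This is why stereo depth cues fade with distance.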

The catch is that the whole 3D hype is not about real ‘3D’ but about stereoscopy. The main idea is not to reproduce real 3D shapes, but to present two slightly different images to the spectators' left and right eyes, such that we get the illusion of looking at a real 3D scene. Unfortunately, that is only the theory. In practice there are many problems with this.

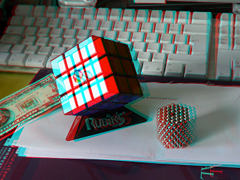

The first practical systems to reproduce stereoscopic images used either colour filters to separate the left and right images, or constructions with lenses, e.g. the View-Master. The best-known method uses red and cyan (or blue) colour filters. Such an image, in which stereo information is encoded in a single 2D image, is called an anaglyph (Figure 3). Basically, whenever you're looking at ‘3D’ cinema through 3D glasses, you are looking at an animated anaglyph. The only difference between the old red/cyan method and current systems is that the latter use more advanced ways to embed the two images into one and to separate them again, which gives better colour reproduction.
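The simplest red/cyan anaglyph takes the red channel from the left image and the green and blue channels from the right image. The red filter then passes (mostly) the left view and the cyan filter the right view. The sketch below works on plain nested lists of RGB tuples. Real anaglyph encoders typically use more refined colour-mixing matrices to reduce ghosting and colour distortion, so treat this as the bare principle only.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]  # (red, green, blue), 0-255
Image = List[List[Pixel]]


def red_cyan_anaglyph(left: Image, right: Image) -> Image:
    """Combine a stereo pair into one image: red from the left view, green and blue from the right."""
    if len(left) != len(right) or any(len(a) != len(b) for a, b in zip(left, right)):
        raise ValueError("left and right images must have identical dimensions")
    return [
        [(l_px[0], r_px[1], r_px[2]) for l_px, r_px in zip(row_l, row_r)]
        for row_l, row_r in zip(left, right)
    ]


left = [[(200, 10, 10), (50, 50, 50)]]
right = [[(5, 100, 150), (60, 70, 80)]]
print(red_cyan_anaglyph(left, right))  # [[(200, 100, 150), (50, 70, 80)]]
```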

Stereoscopy Costs a Lot

The costs for creating a stereoscopic film are obviously higher than for a 2D film. The best way is to really film everything in stereo, as was done for the live-action parts of Avatar. In principle, two normal 2D cameras could be put side by side to create a stereo pair, but this is problematic in many ways. The only real option is to invest in a set of new stereoscopic cameras. Regardless of how it is done, it is expensive. Moreover, some simple cinematographic tricks that are possible with a 2D camera become impossible when filming in 3D, which means those otherwise cheap tricks need to be replaced by expensive CGI.

Only for computer-generated 3D animation is the additional cost small. Because the entire movie is basically a 3D model, getting stereo views from it is trivial: it only takes twice the rendering time and a little effort to properly set up the stereoscopic views. Stereoscopic computer-animated films will probably keep being released even when the 3D hype has died, simply because they are almost as cheap to make as their 2D counterparts.
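In a CGI pipeline, the ‘little effort’ mostly amounts to placing two virtual cameras a small distance apart and projecting the same scene through both. The sketch below assumes two parallel pinhole cameras separated by an interaxial distance b. The function and parameter names are made up for illustration. Production renderers additionally shift the image windows (an off-axis setup) to choose which depth lands on the screen plane; that step is omitted here.

```python
from typing import Tuple

Point3 = Tuple[float, float, float]
Point2 = Tuple[float, float]


def project_stereo(point: Point3, focal_px: float, interaxial_m: float) -> Tuple[Point2, Point2]:
    """Project a camera-space point (x, y, z) into the left and right cameras of a parallel rig.

    Both cameras look along +z; the left one sits at x = -b/2 and the right one at x = +b/2.
    Returned coordinates are relative to each image centre, in pixels.
    """
    x, y, z = point
    if z <= 0:
        raise ValueError("point must lie in front of the cameras")
    half = interaxial_m / 2.0
    u_left = focal_px * (x + half) / z    # x relative to the left camera is x - (-b/2)
    u_right = focal_px * (x - half) / z   # x relative to the right camera is x - (+b/2)
    v = focal_px * y / z                  # parallel cameras share the same vertical coordinate
    return (u_left, v), (u_right, v)


left_uv, right_uv = project_stereo((0.0, 0.0, 2.0), focal_px=1000.0, interaxial_m=0.065)
print(left_uv, right_uv)  # disparity u_left - u_right equals f * b / z = 32.5 px
```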

The alternative to live 3D filming is to film in 2D and do a stereo conversion afterwards. This is also a lot of work, and the quality of the result will depend on how much work, i.e. how much money has been spent on it. Many 3D conversions are a quick hack job and the results are poor. And even the best possible conversion will still be worse than material filmed in stereo.

The extra cost doesn't end there, of course. The cinema needs to either buy a new 3D projector or upgrade its existing projectors. The latter is cheaper, but so is the result. The big problem with each and every technology for projecting stereoscopic images is that it must make do with the light output of a single projector lamp to project two distinct images. The inevitable end result is that, even in the most ideal case, each eye only gets half of the projector lamp's intensity compared to a plain 2D projection (Figure 4). In reality it is even worse. The image-splitting systems and filters are not ideal in either the projector or the glasses, so each eye may get as little as 7% of the intensity it would see in a plain 2D projection (reference: this PDF fetched from the BARCO website in March 2011). This is actually the only difference between a converted projector and one that is specifically and properly designed for 3D: the latter should have a much higher light output to compensate for the loss of intensity. Needless to say, this again increases the cost. That is why many venues resort to a lower-intensity projector, with too dark a 3D image as a result.

And finally, there is a cost to the spectator. The most obvious cost is the rise in ticket price, which is necessary to cover all of the above. But there is also the physical cost of having to wear 3D glasses for the whole duration of the film, and this is not to be underestimated. Regardless of how they are designed, 3D glasses are a nuisance. The mass-produced glasses for cinema use often have the smallest possible lenses to minimise material costs. As a consequence, spectators need to keep their heads in exactly the right position to get a full image in both eyes. Moreover, the glasses are not comfortable, especially not the heavier and bulkier re-usable ones. After every 3D film I watched, I was relieved to finally take them off my aching nose. Plus, for people who already wear glasses, they're especially annoying.

Stereoscopy Brings Little

You can do a simple experiment to see how important stereo vision really is in your daily life, and act like a pirate while doing it, so it's a double win. Tape a patch over one of your eyes, or demolish some old (sun)glasses by removing one lens and covering the other, and wear this contraption. Now walk around your house and try to do some common things. You will have surprisingly few problems and will probably not notice your lack of stereo vision most of the time. The exception is a feeling that something is wrong because one of your eyes is disabled. The only times you'll really have problems are when grasping objects like door handles or cutlery, or when catching objects thrown at you. This makes sense, because the main task of stereoscopy is to provide an accurate measure of depth.

Now tell me, how often do you have the need or even the urge to grasp objects while watching a film? You don't. Watching a film is a passive experience, and that is not a bad thing. People go to a cinema or sit in front of their TV to relax and do absolutely nothing for 90 to 120 minutes. Makers of typical 3D films throw objects at the spectators (preferably pies, glass shards and other sharp objects) mainly to remind them that they're watching a 3D film. Without those silly effects, people would soon forget that they're watching in 3D. It is just like the experiment above: you soon forget that you're only using one eye, except for those few moments when you want to grab a door handle.

This does indicate that there is a use for 3D when interaction is involved. For instance, in certain types of video games 3D can really add to the experience and open up new gameplay elements. If you want to get a taste of this, fire up any game that has a good physics engine and allows you to manipulate objects, like Half-Life 2 or Oblivion. Try to build a stack of small objects like the one in Figure 5, and you'll get the same problem as in the experiment above. Due to the lack of stereo vision you'll often drop the object in front of the stack or behind it, and you'll have to circle around the stack to check whether it is straight. Fortunately, building accurate towers of cups and bowls is not a required skill in either of those games. Still, bringing stereo vision to 3D games could open up a whole new set of possibilities, or at least improve immersion.

We humans can still function without stereo vision simply because it is only one of the many cues our brains use to obtain 3D information from vision. Perspective, lighting, focus and motion parallax can all be exploited with a single eye (Figure 6). The importance of stereo vision decreases as soon as you are moving around. It also decreases with increasing distance from the object you're looking at. A distant mountain actually looks flat, because there is not enough disparity between its projections in your left and right eyes to obtain any depth from stereo. This is why any film marketed as ‘3D’ will shove things in your eyes just to make sure you have seen that it really is 3D.
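A rough calculation shows why the mountain looks flat. Assume an eye separation of 6.5 cm and a point straight ahead. The eyes then converge at an angle of 2·atan(B / 2Z), and the binocular disparity between two points is the difference between their convergence angles. The sketch compares a cup at 0.5 m against a background at 0.6 m with a hypothetical mountain whose front is 4.75 km away and whose back is 5.25 km away.

```python
import math

EYE_SEPARATION_M = 0.065  # assumed typical distance between the pupils


def vergence_angle_rad(distance_m: float, baseline_m: float = EYE_SEPARATION_M) -> float:
    """Angle between the two lines of sight when both eyes fixate a point straight ahead."""
    return 2.0 * math.atan(baseline_m / (2.0 * distance_m))


def relative_disparity_arcsec(near_m: float, far_m: float,
                              baseline_m: float = EYE_SEPARATION_M) -> float:
    """Binocular disparity between a nearer and a farther point, in arcseconds."""
    diff = vergence_angle_rad(near_m, baseline_m) - vergence_angle_rad(far_m, baseline_m)
    return math.degrees(diff) * 3600.0


print(f"cup:      {relative_disparity_arcsec(0.50, 0.60):10.1f} arcsec")
print(f"mountain: {relative_disparity_arcsec(4750.0, 5250.0):10.3f} arcsec")
```

The cup produces thousands of arcseconds of disparity (over a degree). The entire depth of the mountain amounts to well under one arcsecond, below even the best human stereo-acuity thresholds usually reported.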

Cost vs. Reward

There is a common observation about technological advancements of pretty much any kind: the cost-versus-reward curve gets increasingly flat with increasing cost. In other words: diminishing returns. Eventually, the slope of the curve becomes so small that any appreciable increase in reward requires an outrageously large increase in cost. For many technologies related to entertainment, we're getting close to that point or are already beyond it.

Audio is a nice example: for most people, the CD's 16-bit, 44.1 kHz format was perfectly sufficient. In fact, most people are perfectly happy with MP3, which from a purely objective point of view is much worse in quality. Most people do not even seem to notice or care that recent albums are often mastered much more poorly than a few decades ago. Yet nowadays companies are trying to push consumer audio products at 24-bit 96 kHz or even more. This makes it possible to faithfully reproduce a dynamic range between perfect silence and permanent hearing damage, and a frequency range that is only useful for dogs and bats. For mixing and processing audio this seemingly insanely high resolution is useful, but for a consumer end-product it is not. In fact, these higher specifications could make it impossible to manufacture something satisfactory for the same low price at which a good system with lower specifications could have been made.

The same goes for megapixels in cameras: when the size of the CCD sensor remains fixed, increasing the pixel count implies making the pixels smaller, which increases noise. A 10-megapixel camera I bought in 2009 took worse (noisier) pictures than a much older 4-megapixel camera from 2004, even though both had the same form factor and cost pretty much the same. In this case the curve did not merely flatten; it even went downward again.
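The numbers behind the audio example follow from two textbook rules. First, the theoretical dynamic range of linear PCM is about 20·log10(2^bits) dB, roughly 6 dB per bit, ignoring dither and noise shaping. Second, the highest representable frequency is half the sample rate (the Nyquist limit). The sketch below simply evaluates both for the CD format and for 24-bit/96 kHz.

```python
import math


def pcm_dynamic_range_db(bits: int) -> float:
    """Ratio between full scale and one quantisation step, in decibels."""
    return 20.0 * math.log10(2 ** bits)


def nyquist_limit_hz(sample_rate_hz: float) -> float:
    """Highest frequency a sampled signal can represent without aliasing."""
    return sample_rate_hz / 2.0


for bits, rate in ((16, 44_100), (24, 96_000)):
    print(f"{bits}-bit / {rate} Hz: ~{pcm_dynamic_range_db(bits):.0f} dB dynamic range, "
          f"frequencies up to {nyquist_limit_hz(rate):.0f} Hz")
```

This gives about 96 dB and 22 kHz for the CD, versus about 144 dB and 48 kHz for 24/96. Human hearing is generally taken to top out around 20 kHz, so the CD already covers the audible band.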

This same flattening cost/reward curve applies to cinema, and 3D is near the point where the extra cost is barely worth the reward (Figure 7). The first advances in cinematography were sound, increased frame rate and increased film resolution. Then came colour, which was a pretty large step upward, even though for some films colour is non-essential. Then came stereo audio and eventually surround sound, which again is redundant for quite a few film genres. The latest advances are higher resolutions, even for home cinema systems, and now of course 3D. Concerning resolution, 1080p is high enough in the vast majority of domestic circumstances to produce an image that matches or exceeds the finest detail a human eye can resolve. There is no doubt that a lot of resources and energy will be wasted to produce even higher resolutions and push them into people's living rooms. Then people can walk up to the screen and see individual hairs on an actor's scalp, something everyone badly wants to do all the time, of course. Or perhaps people will want to be able to do the ‘enhance’ thing from Blade Runner on each frame of every film. I don't think so.
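Whether 1080p already exceeds what the eye can resolve depends on screen size and viewing distance. A common rule of thumb is that the eye resolves details of about one arcminute, roughly corresponding to 20/20 vision; that figure is an assumption here. The sketch computes the distance beyond which a single pixel subtends less than that angle. The 55-inch 16:9 screen is just an example.

```python
import math

ONE_ARCMINUTE_RAD = math.radians(1.0 / 60.0)  # assumed visual acuity limit


def width_from_diagonal_m(diagonal_inch: float, aspect: tuple = (16, 9)) -> float:
    """Physical width of a screen given its diagonal in inches and its aspect ratio."""
    w, h = aspect
    return diagonal_inch * 0.0254 * w / math.hypot(w, h)


def max_useful_distance_m(screen_width_m: float, horizontal_pixels: int,
                          acuity_rad: float = ONE_ARCMINUTE_RAD) -> float:
    """Viewing distance beyond which one pixel subtends less than the acuity limit."""
    pixel_pitch_m = screen_width_m / horizontal_pixels
    return pixel_pitch_m / math.tan(acuity_rad)


width = width_from_diagonal_m(55)
for label, pixels in (("1080p", 1920), ("2160p", 3840)):
    print(f'55" {label}: individual pixels unresolvable beyond ~{max_useful_distance_m(width, pixels):.1f} m')
```

Under these assumptions, a 55-inch 1080p screen already reaches the acuity limit at a little over 2 m. A 2160p screen only pays off if you sit about half that distance away.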

For some reason, a more useful improvement in cinematography has not yet been implemented: a further increase in frame rate. We've been stuck with 24 frames per second (FPS) for ages. Some people will tell you that 24 FPS is enough because our eyes work at that rate. That is a gross oversimplification. Our eyes do not work like a camera that records frames at a fixed rate. The speed of our visual receptors depends on their location on the retina and on the intensity of incoming light. The human eye can discern visual frequencies up to 60 Hz under certain circumstances, which is why old 60 Hz CRT monitors were often headache-inducing. Under average circumstances the limit is about 30 Hz, but that does not mean 30 FPS is enough. Nyquist's theorem dictates that with 24 samples per second, the highest reproducible frequency is a measly 12 Hz. To reproduce 30 Hz, 60 FPS is required. This is why serious computer gamers are not content with anything less than 60 FPS in an action-based game. Granted, there is little extra visual information in 60 FPS footage compared to 24 FPS. But the image does look a whole lot smoother, especially during fast camera pans and frantic action scenes.
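The sampling argument can be made concrete if a flicker or periodic motion is treated as a single sinusoid, which is a simplification. Sampling it at a given frame rate can only represent frequencies up to half that rate. Anything faster folds back to a lower, wrong ‘alias’ frequency, which is also the cause of the familiar wagon-wheel effect. The sketch computes both.

```python
def nyquist_limit(fps: float) -> float:
    """Highest frequency that can be reproduced at a given frame rate."""
    return fps / 2.0


def apparent_frequency(signal_hz: float, fps: float) -> float:
    """Frequency at which a sinusoid of signal_hz appears after sampling at fps (aliasing)."""
    folded = signal_hz % fps
    return min(folded, fps - folded)


print(nyquist_limit(24), nyquist_limit(60))                    # 12.0 30.0
print(apparent_frequency(30, 24), apparent_frequency(30, 60))  # 6 30
```

A 30 Hz component shot at 24 FPS shows up as a 6 Hz artefact. At 60 FPS, the same component sits exactly at the Nyquist limit.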

It is possible to automatically interpolate frames to boost the frame rate of existing material, and many current TV sets already do this. But the algorithms are inevitably unable to realistically interpolate frames when the camera or complicated objects in the film are moving very fast, because then there is simply too much information missing to reconstruct the intermediate frames. Ironically, those are the moments when extra frames are the most desired. In other words, those algorithms will fail exactly when a higher frame rate is the most useful. The only remedy is to record the material at a higher frame rate.

Again, a higher FPS will not benefit all kinds of films. But for many films it will be much more useful than 3D, and it requires absolutely no extra effort from the spectator! No annoying glasses, no loss of intensity, no focusing problems. The horribly annoying ‘shaky cam’ fad from around 2010 might have been less irritating if films (or at least those parts(1)) had been recorded at 60 FPS. At 24 FPS, the image just becomes one huge smear or slide show when the camera is shaking. It would still be annoying and nauseating at 60 FPS, but at least we'd be able to see what is actually happening. I suspect that the movie industry is actually planning to raise the frame rate, but perhaps they want to keep this trick up their sleeve(2) for when the 3D hype wanes. They'll probably call it “hi-motion” or whatever and present it as the next big revolution. Yet camcorders have long been able to record at 60 FPS, and digital 3D projectors are already capable of projecting at high frame rates.

Figure 8 shows the data points from Figure 7, plus a few extra, on a different set of axes. The vertical axis shows roughly to what degree a technological advance improves the viewer's experience. The horizontal axis roughly measures how much physical and mental effort in total it requires from the spectator. This includes the effort of suppressing nuisances caused by the technology.

The ±12 FPS point represents primitive cinema, which required considerable effort: the material was often blurry, poorly lit, and of low frame rate or incorrect playback speed. But it was a huge step up from still photographs. Compared to this, the 24 FPS cinema that became the standard still requires some effort, in the sense that its frame rate is too low to capture fast motion.

The whole point of this graph is to show that pretty much every advance since then, like colour or surround sound, comes at near-zero extra effort from the spectator. Even if such an advance does not bring much, it does not demand any extra effort from the viewer either. For instance, someone who is fully colour blind experiences no adverse effects from watching a colour rendition of a film instead of a greyscale rendition. Likewise, projecting a 2160p UHD film in situations where it is impossible to see any improvement over 1080p has no adverse effects on the spectators. No matter how little colour or higher resolution adds, from the spectator's perspective there are no side effects or extra requirements to look at it. Stereoscopic cinema or ‘3D’, on the other hand, also does not bring a whole lot, but it comes at a high cost.

Many Films Do Not Benefit from 3D

Yet, even though 3D is far from essential in a passive experience like a film, it can still add to the feeling of immersion. Avatar is a great example of this. Unfortunately, it is also one of the few examples where 3D really is worth the cost and effort. The lush jungle environments and floating islands look good on their own, but viewing them in 3D really makes you ‘be there’. However, there are many, many movies where the visual aspect is mostly subordinate to other things like the story (yes, some people actually watch films for more than just fancy visuals).

Actually there are only a few genres where 3D is likely to add to the experience: sports, action films, documentaries, horror films, and last but not least: erotic films (not to use the more popular four-letter term). In comedies, 3D is exclusively used to throw pies and other objects at your eyes, and any film that has to resort to such tactics to be funny isn't worth watching anyway. Even young kids will yawn at the fifth movie that throws a pie in their face. In dramas or thrillers, 3D is of little use. Nobody cares that person A really is standing slightly nearer to the camera than person B, which would also be immediately obvious once the camera or one of those persons starts moving in the 2D image. Sports broadcasts might be the only area where 3D can really add a useful third dimension, so there might be a future for 3D television for sports enthusiasts. But really, the biggest drive for 3D cinema may well be that special ‘entertainment’ industry, which may also cause many people to want to distance themselves from ‘3D’ in the long term.

While watching ‘Tron Legacy’ in 3D, the only time I really appreciated the 3D was in a scene with a see-through energy field in front of the actors. In a 2D projection, that field would only be obvious if the camera moved constantly. That was something like thirty seconds of the entire film. For the rest of the two-hour runtime, I was only annoyed by the doubling of the image when I couldn't properly focus my eyes, and by the bulky 3D glasses. They never gave me a complete view of the screen, made the already dark images even darker, and caused my eyes, nose and ears to ache by the end of the film. I was happy that the cinema sent me a survey afterwards, where I could indicate that I would certainly watch the 2D version of a 3D film if it were available.

In fact, I have not yet met anyone whose appreciation of 3D effects in films was more than lukewarm. Most of the people who can see stereoscopic cinema at all make the same remarks as in this article. The 3D is barely noticeable except in scenes inserted with the sole purpose of poking stuff in your eyes, 3D glasses are annoying, and focusing problems cause eye strain and/or headaches. This is in stark contrast with everything I read and see in the media, which wants to make us believe that 3D is perfect and will soon make 2D photography obsolete. What an utter joke.

Many People Do Not Benefit from 3D

It is pretty obvious that anyone with a condition that makes stereo vision impossible has no benefit from a ‘3D’ movie. They will, however, still need to wear the glasses or they'll see artefacts in the image. They get all the nuisances of 3D without any of the benefits! Even a cyclops would need to wear a ‘monocle’ containing either the left or right filter of regular 3D glasses. Yet even people with perfect stereo vision may have problems with stereo cinematography, because it has many inherent issues.

First, there is depth-of-field, a problem that occurs with any stereoscopic imagery but especially with films converted from 2D. When a camera or an eye focuses on an object, only the parts of the scene at the depth of that object will be in focus, i.e. look sharp. In the photo of the mantis, for example (Figure 9), both the background and the forelegs are out of focus. Their depth differs too much from that of the body, on which the camera had focused. Depending on the aperture of the camera, this problem will not always be noticeable. A narrow-aperture camera that focuses somewhere in the average depth of the scene will produce a sharp image pretty much everywhere. But even with a specialised 3D camera, a wide aperture will be unavoidable when filming in dark environments, and stuff in the background or in front of the actors will look blurry. The human eye itself also ‘suffers’ from depth-of-field, but nobody notices this because the eye always focuses on whatever one is looking at. Not so with 3D cinema: nobody can prevent the spectator from looking at something other than what the camera was focused on.
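The effect of aperture on depth-of-field can be estimated with the standard thin-lens formulas based on the hyperfocal distance H = f²/(N·c) + f. Here f is the focal length, N the f-number and c the largest acceptable blur spot (circle of confusion). The 0.03 mm circle of confusion, 50 mm lens and 3 m subject distance below are illustrative assumptions, not the parameters of any particular 3D rig.

```python
import math


def depth_of_field_mm(focal_mm: float, f_number: float, subject_mm: float,
                      coc_mm: float = 0.03) -> tuple:
    """Near and far limits of acceptable sharpness (thin-lens approximation).

    All distances are in millimetres and measured from the lens; coc_mm is the
    acceptable circle of confusion (0.03 mm is a common full-frame assumption).
    """
    hyperfocal = focal_mm ** 2 / (f_number * coc_mm) + focal_mm
    near = subject_mm * (hyperfocal - focal_mm) / (hyperfocal + subject_mm - 2 * focal_mm)
    if subject_mm >= hyperfocal:
        far = math.inf  # everything up to infinity is acceptably sharp
    else:
        far = subject_mm * (hyperfocal - focal_mm) / (hyperfocal - subject_mm)
    return near, far


for n in (2.8, 11):
    near, far = depth_of_field_mm(50, n, 3000)
    print(f"f/{n}: acceptably sharp from {near / 1000:.2f} m to {far / 1000:.2f} m")
```

In a dark scene one might have to open the lens from f/11 to f/2.8. Under these assumptions, that shrinks the sharp zone around a subject at 3 m from roughly 2.75 m deep to roughly 0.6 m. Anything the spectator looks at outside that zone is blurred.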

In a 2D film, the fact that some parts of the image are out of focus is not such a problem because the eyes know they are looking at a single flat surface with a changing image on it. However, in a 3D film there are two problems related to the phenomenon of depth-of-field.

First, due to the human eye's depth-of-field, the spectator's eyes must focus at the depth of the screen at all times to see the image sharply. However, the link between focus and convergence is strongly hard-coded in the visual system of most people. As soon as the eyes see something ‘coming near,’ they will have the reflex to focus nearby and the entire image will become blurry (Figure 10). Many people can ‘override’ this, but quite a few cannot, including me. I can cross my eyes on demand, but they will then automatically focus nearby. When I'm watching a cinema screen, keeping it in focus gets priority. This means I need to make an enormous conscious effort to bring my eyes into the alignment shown in the right part of Figure 10. The result is that I see every stupid “stuff in your face” effect as a lame doubling of the image. Even for those people who can override this coupling of focus and convergence, it is still fatiguing. That makes sense, because it is not something one ever has to do in normal everyday life. This problem is known as the vergence-accommodation conflict (VAC), and there is no obvious technical solution for it (yet). The hard-coded focus-versus-convergence reflex makes perfect sense: there is no time to actively start focusing on something that is about to hit your eyes.
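The size of the conflict can be estimated with similar-triangle geometry. Assume eyes 6.5 cm apart, a screen at distance D, and an on-screen horizontal parallax p (right-eye image position minus left-eye image position). The lines of sight then cross at Z = e·D/(e − p). The eyes converge at Z but must stay focused at D. The mismatch is conveniently expressed in diopters (inverse metres) as |1/Z − 1/D|. The function names and the 15 m cinema example are illustrative.

```python
EYE_SEPARATION_M = 0.065  # assumed interpupillary distance


def perceived_depth_m(screen_distance_m: float, parallax_m: float,
                      eye_sep_m: float = EYE_SEPARATION_M) -> float:
    """Distance at which the two lines of sight cross for a given on-screen parallax.

    parallax_m = 0 puts the point on the screen, negative (crossed) parallax brings it
    in front of the screen, and positive parallax up to eye_sep_m pushes it behind.
    """
    if parallax_m >= eye_sep_m:
        return float("inf")  # lines of sight are parallel (or would have to diverge)
    return eye_sep_m * screen_distance_m / (eye_sep_m - parallax_m)


def vergence_accommodation_mismatch_d(screen_distance_m: float, parallax_m: float) -> float:
    """Diopter difference between where the eyes converge and where they must focus (the screen)."""
    converge_at = perceived_depth_m(screen_distance_m, parallax_m)
    return abs(1.0 / converge_at - 1.0 / screen_distance_m)


# A 'pie in your face' drawn with 58.5 cm of crossed parallax on a screen 15 m away:
print(perceived_depth_m(15.0, -0.585), vergence_accommodation_mismatch_d(15.0, -0.585))
```

Under these assumptions, the pie appears about 1.5 m away while the eyes must keep focusing at 15 m, a mismatch of about 0.6 diopters. For a point on the screen plane, the mismatch is zero.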

Second, due to the depth-of-field of the camera, parts of the image will be blurry. However, the 3D effects make the eyes think they are looking at a real 3D shape. If a spectator decides to look at something in the background, it will appear blurry and the eyes will reflexively try to correct their focus. That is futile, because it will only make things more blurry. The combined result of these two issues is eye strain and often a splitting headache when the film ends. I don't know about you, but I do not go to a cinema and spend some of my hard-earned bucks to get a headache. A bottle of cheap whiskey costs the same and has the same effect.

The issue of camera depth-of-field can be avoided by using narrow-aperture cameras all the time. Of course, narrow-aperture means low light, hence noisy pictures in dark scenes unless the camera is very high-end or in other words expensive, again adding to the ticket price. Computer-generated films do not suffer from this problem because everything is always in focus. An extra effort and extra processing are actually required to simulate the depth-of-field of a real camera. For an animated film specifically made for 3D, adding this artificial DoF is pointless and simply stupid.

Even with everything in focus, however, the discrepancy between focusing on the distant cinema screen and objects appearing near is unavoidable. It can be mitigated by limiting the range of depth effects, such that the offset between expected and virtual depth is never too large. Of course, that would reduce the overall ‘3D experience’ as well. The only way to really solve this problem for any anaglyph-based system is to build some extremely fancy active focusing system into the 3D glasses. This system would keep the screen in focus even when the eyes try to focus in front of or beyond it. That would be complicated and expensive, and it would make the glasses even heavier. It would also only work if the spectator tries to focus on the specific depth that the director intended, or if the glasses could detect at what depth the viewer is focusing. Otherwise it risks being as headache-inducing as two bottles of whiskey.
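Limiting the range of depth effects amounts to a ‘parallax budget’: choose a maximum acceptable vergence-accommodation mismatch and keep all on-screen parallax within the range that respects it. By similar triangles, the lines of sight cross at Z = e·D/(e − p) for eye separation e, screen distance D and on-screen parallax p. A mismatch limit of δ diopters therefore translates into a parallax range of ±e·D·δ, capped at e on the far side. The 0.3-diopter limit below is an arbitrary illustrative value, not an established standard. Hard clipping is also the crudest possible way to enforce such a limit; a real production would rather compress depth smoothly.

```python
EYE_SEPARATION_M = 0.065  # assumed interpupillary distance


def comfortable_parallax_range_m(screen_distance_m: float, max_mismatch_d: float,
                                 eye_sep_m: float = EYE_SEPARATION_M) -> tuple:
    """On-screen parallax range whose vergence stays within max_mismatch_d diopters of the screen."""
    limit = eye_sep_m * screen_distance_m * max_mismatch_d
    # Beyond +eye_sep_m the eyes would have to diverge, so that side is capped.
    return -limit, min(limit, eye_sep_m)


def clamp_parallax(parallaxes_m: list, screen_distance_m: float, max_mismatch_d: float) -> list:
    """Clip every parallax value into the comfortable range (a deliberately naive strategy)."""
    lo, hi = comfortable_parallax_range_m(screen_distance_m, max_mismatch_d)
    return [min(max(p, lo), hi) for p in parallaxes_m]


# Living-room TV at 2.5 m, hypothetical 0.3-diopter comfort limit:
print(comfortable_parallax_range_m(2.5, 0.3))
print(clamp_parallax([-0.5, 0.0, 0.02, 0.5], 2.5, 0.3))
```

Shrinking the allowed range this way also flattens the depth effect, which is exactly the trade-off described above.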

There is an additional source of blur during scenes where the camera slowly pans (from left to right, for instance), if the image is projected through any time-based left/right multiplexing system, such as the spinning filter wheel from Figure 4. In practice this means: most systems currently in use. The root cause is that such a projection system displays the left and right streams with a slight delay between them. The delay equals the inverse of the frame rate at which the projector works, which is also the rate at which the filter wheel or shutter alternates between left and right. For a system working at 120 frames per second, this means a delay of 8.3 ms. The spectator's eyes smoothly track the panning movement as a whole, so one of the streams (e.g. the left) appears to arrive slightly early and the other slightly late, as illustrated in Figure 11. This causes an offset in the positions of objects seen on the screen. The offset manifests itself as an apparent motion blur that gets worse with faster panning and with slower alternation between the left and right eye streams. In theory this problem could be counteracted by recording the material with an inverse delay between left and right. In practice, that would only work for projection systems that have that exact delay. A similar problem occurs with plain 2D projection, where the same frame is re-projected multiple times (typically two or three times). It is less severe in that case, because there is no delay between the left and right eyes.
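The size of this offset is just the panning speed multiplied by the left/right delay. In the sketch below, the delay is taken as the inverse of the alternation rate, as described above, and the pan speed is expressed in pixels per second of the projected image. The 480 px/s pan, a 1920-pixel-wide frame crossing the screen in four seconds, is a hypothetical example.

```python
def left_right_delay_s(alternation_rate_hz: float) -> float:
    """Time between showing a left-eye frame and the corresponding right-eye frame."""
    return 1.0 / alternation_rate_hz


def apparent_offset_px(pan_speed_px_per_s: float, alternation_rate_hz: float) -> float:
    """Positional mismatch between the eye streams for eyes smoothly tracking a pan."""
    return pan_speed_px_per_s * left_right_delay_s(alternation_rate_hz)


print(f"delay at 120 Hz: {left_right_delay_s(120) * 1000:.1f} ms")         # 8.3 ms
print(f"offset for a 480 px/s pan: {apparent_offset_px(480, 120):.1f} px")  # 4.0 px
```

Doubling the alternation rate halves the offset, so faster alternation reduces the effect but never eliminates it.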

Stereoscopy is Not the Same as 3D

Another related problem, and perhaps the biggest issue with stereoscopic imagery altogether, is that there is a fundamental difference between being fed stereoscopic images and looking at things while walking around in a real 3D world. In the latter case the viewer has full control. While looking at objects, their eyes execute a graceful ballet: rotating the eyeballs, adjusting the angle between them, and adjusting the focus of their lenses, all at the same time. All these actions are the result of complicated processing. It takes into account the estimated layout of the scene, recognised objects, and expectations based on partial information already gathered. Looking at things in a 3D world is not a passive process; it is very active, even though you may not experience it as such.

However, in a stereoscopic film the disparity and focus are forced upon you. Instead of proactively changing the focus and vergence angle of your eyes, you need to keep focusing on the fixed-distance screen and figure out the correct disparity each time the image changes substantially. In other words, instead of anticipating, your eyes will lag behind the optimal settings for the image. Moreover, due to the focusing problem discussed above, your eyes need to venture into focus/disparity combinations that never occur in the real world. A good director will know this. Such a director will give cues to the viewer as to where their attention should go and will never cause sudden unexpected changes, giving your eyes time to align with the image. Alas, such directors are scarce. Most of them want to show off their 3D technology without knowing anything about stereoscopy, and will do things that hurt your eyes. But even the most optimally executed 3D film will still be more fatiguing than watching the exact same scene in the real world. Again, this issue is less severe in an interactive application like a video game. The players mostly have good control over their character's movement and what they are looking at, although the focus/disparity issue always remains.

Even when ignoring all those issues, the fact remains that only under ideal circumstances will your eyes see the same pair of stereo images as they would have seen when looking at the actual 3D scene being filmed. You would need to look at the cinema screen from a perfectly perpendicular angle, at such a distance that you see the image at exactly the right size, and with your head perfectly upright.

All practical “3D” technology in use today is in fact nothing but a hack of the human visual system. It somehow shows different images to the left and right eyes and assumes that the brain will do the rest, but in reality there is more to depth perception than just differences in image projections on the left and right retinas. It is for this very reason that one should not expose infants to stereoscopic images or films, because their visual system hasn't fully developed yet. Being exposed too often to the unnatural and often physically impossible stimuli offered by stereo images risks wiring their brains in such a way that they will have problems looking at things in the real world.

2D to 3D Conversion Sucks

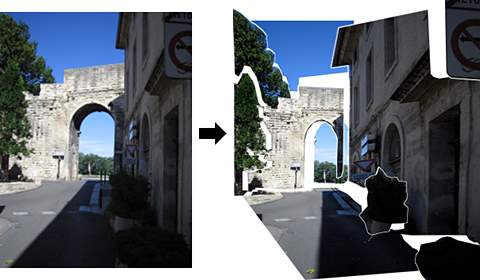

The process behind converting a 2D film to 3D is akin to creating a paper pop-up book. The scene needs to be cut into parts at different depths (Figure 12). Then two projections of this ‘inflated’ view can be made, corresponding to what the left and right eyes should see. Often, the original image is used as either the left or right view, such that only one additional image needs to be constructed. The actual process involves digital processing instead of scissors and glue. In essence, however, it is still only a 3D pop-up constructed from planar cut-outs. As a result, a lot of things (e.g., the trees in Figure 12) still look flat, because it is simply too much work to cut out every little part.
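The pop-up approach can be illustrated on a single scanline. Each pixel is assigned to a flat depth layer, and every layer is shifted sideways by its own fixed disparity to synthesise the second eye's view. Nearer layers overwrite farther ones. This is a toy sketch: the function name, the layer representation and the whole-pixel shifts are assumptions for illustration. It still shows both weaknesses. Everything within one layer moves by the same amount, so it stays flat. And gaps appear where a shifted foreground layer uncovers background that the camera never saw.

```python
from typing import List, Optional, Sequence


def popup_second_view(row: Sequence[str], layer_of_pixel: Sequence[int],
                      layer_disparity_px: Sequence[int]) -> List[Optional[str]]:
    """Synthesise the other eye's scanline from flat 'cut-out' layers.

    row                -- pixel values of the original scanline
    layer_of_pixel     -- depth layer index per pixel (higher index = nearer)
    layer_disparity_px -- whole-pixel horizontal shift applied to each layer
    Returns the new scanline; None marks pixels that no layer covers (disocclusions).
    """
    out: List[Optional[str]] = [None] * len(row)
    nearest = [-1] * len(row)  # z-buffer: nearest layer written to each output pixel
    for x, (value, layer) in enumerate(zip(row, layer_of_pixel)):
        nx = x + layer_disparity_px[layer]
        if 0 <= nx < len(row) and layer > nearest[nx]:
            out[nx] = value
            nearest[nx] = layer
    return out


# Background 'B' on layer 0 stays put; foreground 'F' on layer 1 shifts two pixels left.
print(popup_second_view(list("BBBBFFBBBB"), [0, 0, 0, 0, 1, 1, 0, 0, 0, 0], [0, -2]))
# ['B', 'B', 'F', 'F', None, None, 'B', 'B', 'B', 'B']
```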

A more advanced method that produces a more realistic result is to create 3D models for all (or the most important) objects in the scene and align them with the image. This is similar to creating a 3D animation, with the difference that the whole 2D image acts as the texture for the models. This method is of course incredibly labour-intensive, which is why many conversions stick to the pop-up method. Even with the help of structure-from-motion software that automatically reconstructs 3D from 2D footage, a whole lot of work is required to clean up all the cases where this software fails or produces artefacts.

There is an additional problem with getting 3D from 2D, which is occlusion. Hold a small piece of paper near your face and focus on it. Then alternately close your left and right eye, and pay attention to the background without focusing on it. Your right eye sees things in the background that your left eye does not, and vice versa (Figure 13, also visible in Figure 12). When blowing up a 2D image to 3D, one only has information from what a single ‘eye’ saw during filming. There will be undefined areas in the 3D projections as soon as an object is in front of another object. This can be mitigated by keeping the disparity between the stereo images small, but that will reduce the 3D effect. The proper way to do it is to fill in those undefined areas with something plausible that stays consistent over different frames. With a bit of luck, parts from other frames can be used to fill in the areas correctly, otherwise a guess must be made. There is yet another problem with semi-transparent as well as shiny or reflective (‘specular’) surfaces: it does not suffice to clone and transform them because their appearance will differ between the two eyes' viewpoints. Their lighting or reflection should be altered to get a realistic effect. In other words, no matter how a 2D-to-3D conversion is done, it is an awful lot of work and prone to looking funky if not done right.
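The occlusion problem shows up as soon as you shift pixels by their disparity to synthesise the second view. Below is a minimal forward-warping sketch on a single scanline, a basic form of what is often called depth-image-based rendering. It assumes each pixel's integer disparity is already known, which in a real conversion is itself the hard part. `None` marks pixels that the new viewpoint should see but the camera never filmed.

```python
def warp_row(colors, disparities):
    """Forward-warp one scanline to a second viewpoint.

    colors[i] is the pixel value at column i of the original view and
    disparities[i] its integer horizontal shift in pixels (larger = closer).
    Returns the new row; None marks disoccluded pixels with no known content.
    """
    width = len(colors)
    out = [None] * width
    nearest = [-1] * width  # disparity of the pixel currently stored per column
    for x, (color, d) in enumerate(zip(colors, disparities)):
        nx = x - d
        # When two pixels land on the same column, the closer one (larger d) wins.
        if 0 <= nx < width and d > nearest[nx]:
            out[nx] = color
            nearest[nx] = d
    return out


row = list("bbbFFFbbbb")                   # background 'b', foreground object 'F'
disp = [0, 0, 0, 2, 2, 2, 0, 0, 0, 0]      # the object is closer, so it shifts more
print(warp_row(row, disp))
```

The object moves two columns and leaves two columns of background that nobody ever saw. Those holes are exactly what has to be filled with something plausible, ideally taken from other frames, otherwise guessed.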

To make things worse, companies are trying to make systems that automatically inflate 2D images to 3D. As I just explained, it is already hard to get good results when a whole crew of skilled people spends months on just a few hours of footage for which they know what it should look like in 3D. Now imagine a single image-processing chip in your TV doing the same job for arbitrary video streams, at a speed of at least 24 frames per second with no noticeable delay. The inevitable outcome is that those systems will in many cases produce ‘3D’ that is wonky and unnatural, and that may actually look worse than the 2D original. It is really telling that people see any benefit at all in such fake and awkward-looking 3D renditions of 2D images. To me this is almost hard evidence that the trendiness of ‘3D’ has made some people so euphoric that their brains systematically kill every bit of common sense that contradicts the hype.
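Some quick arithmetic shows how tight that real-time budget is. The sketch assumes a Full HD stream at 24 FPS; the per-pixel figure is a sequential-equivalent budget, since a real chip spreads the work over many parallel units. Within that budget it has to estimate depth, warp the image and fill the occlusion holes for every pixel.

```python
def realtime_budget(width_px: int, height_px: int, fps: float):
    """Time available per frame and per pixel when processing a live video stream."""
    frame_ms = 1000.0 / fps
    pixels_per_s = width_px * height_px * fps
    ns_per_pixel = 1e9 / pixels_per_s
    return frame_ms, pixels_per_s, ns_per_pixel


frame_ms, px_s, ns_px = realtime_budget(1920, 1080, 24)  # assumed Full HD at 24 FPS
print(f"{frame_ms:.1f} ms per frame, {px_s:,} pixels/s, {ns_px:.1f} ns per pixel")
```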

The bottom line is that it is possible to make a good 2D-to-3D conversion, but there are so many pitfalls and limitations that the result is likely to be bad unless a whopping amount of effort is poured into it. It might be easier to just film the entire thing again with proper 3D cameras. For a 3D animation like Toy Story the process is much easier, provided all the original material has been kept: it suffices to re-render the graphics with two virtual cameras instead of one.
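Re-rendering with two virtual cameras is easy because the renderer knows the full 3D scene, so both views come out complete: no pop-up planes and no holes. Below is a minimal pinhole-camera sketch of that idea. It assumes a simple parallel rig; real productions often converge the cameras or shift the images to choose which depth lands on the screen plane, and the focal length and baseline here are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class PinholeCamera:
    focal_px: float    # focal length expressed in pixels
    cx: float          # horizontal principal point (image centre), in pixels
    x_offset_m: float  # horizontal position of the camera centre, in metres

    def project_x(self, point):
        """Horizontal image coordinate of a 3D point (x, y, z), camera looking along +z."""
        x, _y, z = point
        return self.focal_px * (x - self.x_offset_m) / z + self.cx


def stereo_pair(focal_px: float, cx: float, baseline_m: float):
    """Replace one virtual camera by two parallel ones, half a baseline to each side."""
    half = baseline_m / 2
    return PinholeCamera(focal_px, cx, -half), PinholeCamera(focal_px, cx, +half)


left, right = stereo_pair(focal_px=1000.0, cx=960.0, baseline_m=0.065)
for z in (1.0, 5.0, 50.0):
    point = (0.2, 0.0, z)
    disparity = left.project_x(point) - right.project_x(point)
    print(f"depth {z:5.1f} m -> disparity {disparity:6.2f} px")
```

For this rig the disparity works out to focal length times baseline divided by depth, so every pixel automatically gets the depth cue that a conversion has to reconstruct by hand.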

What About True 3D?

I believe the two major forces that will drive 3D back into its vampire coffin once the hype has waned are the requirement to wear special glasses and the lack of control over what you're looking at. When the euphoria of the hype has gone, the realisation that 3D brings little to nothing for many types of films will seep through to the general crowd. They will refuse to wear those stupid, cumbersome glasses for those films, and most likely also for the few films where 3D might be worth it.

There are some stereoscopic methods that do not require glasses, but they have other problems. All those methods are based on lenticular lenses or similar optical tricks to show a different image to the viewer's left and right eyes. For this to work, the viewer must be exactly in the right position. It is obvious that if you position your right eye at the location where your left eye was supposed to be, you'll see the wrong image. This gets worse if multiple spectators want to watch the same screen because the number of ‘sweet spots’ to get correct stereo is limited. The positioning problem can be solved by tracking the spectator's eyes and actively steering the lenses to ensure each eye always sees the correct image, but then the whole set-up will only work for a single spectator. (See the SRD paragraph for more info.)
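The positioning problem can be illustrated with a deliberately idealised model of a fixed lenticular display: at the design viewing distance the views repeat in side-by-side zones, and each eye sees whichever view its zone belongs to. The sketch assumes two views and a zone width equal to a 63 mm eye separation; real multi-view displays use more views and different geometry, which widens the good region but does not remove the repetition.

```python
import math

IPD_M = 0.063  # assumed eye separation, in metres


def view_seen(eye_x_m: float, zone_width_m: float, n_views: int) -> int:
    """Which of the n_views images reaches an eye at lateral position eye_x_m.

    Idealised model: at the design viewing distance the views repeat in
    side-by-side zones of equal width.
    """
    return math.floor(eye_x_m / zone_width_m) % n_views


def stereo_ok(head_x_m: float, n_views: int = 2, zone_width_m: float = IPD_M) -> bool:
    """True if the left and right eye see adjacent views in the right order."""
    left = view_seen(head_x_m - IPD_M / 2, zone_width_m, n_views)
    right = view_seen(head_x_m + IPD_M / 2, zone_width_m, n_views)
    # When the sequence wraps around, the right eye gets a view meant for a
    # position further left, so the eyes receive the wrong pair.
    return right == left + 1


positions = [i * 0.001 for i in range(1260)]  # sweep the head across 1.26 m
share = sum(stereo_ok(x) for x in positions) / len(positions)
print(f"head positions with correct stereo: {share:.0%}")
```

In this two-view model, moving your head sideways by a few centimetres is enough to go from a correct pair to a swapped one, and only about half of all head positions are ‘sweet spots’.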

Last but not least, these systems are still plain stereoscopic methods and therefore also suffer from the problems of focus discrepancy and having no control.

Ideally a 3D projection system should not just show stereoscopic images but project a true 3D shape. This means that anyone looking at those shapes from whatever direction will see a real and correct stereo 3D image, and they'll be free to look and focus at whatever they want. Even people with only one working eye would see real 3D by moving their head and from the fact that their eye is focusing on different depths. Unfortunately there are also fundamental problems with this whole idea:

- First, if such a film were projected for a large audience, the people on the left of the room would have a considerably different view of the scene than the people on the right. Basically, it would be the same as watching a real theatre play or opera. Only the people sitting in the middle would really see what the director intended. If the director took this into account, there would be severe limitations on what kinds of scenes could be filmed to offer everyone an acceptable view. Anything with narrow corridors, small rooms, or tall objects near the actors and not entirely behind them would be a no-go.

- Because the projection produces real 3D shapes, the size of what can be projected is constrained by the ‘projection volume,’ the 3D equivalent of a 2D screen. Suppose you want to project a true 3D view of the Grand Canyon: tough luck. You could project a smaller version closer to the viewer, but that would only exacerbate the large-audience issue, and it would really look like a miniature Grand Canyon. To get any sensation of distance, the 3D projection volume would need to be immense; something the size of a soccer stadium might be the lower limit.

- Projecting true 3D is plain insanely difficult. There are already some systems that can project small images. However, those systems are basically giant blenders fitted with mirrors and lasers. If that sounds scary, that's because it is. The lasers project images onto the spinning mirrors in such a way that the beams reflected in a certain direction correspond to the image viewed from that direction. To make this flicker-free, the machine must spin at speeds that require the mirrors to be fitted very securely, otherwise the rig could turn into a glass-throwing bomb at any time. For this reason those systems are always encased in a sturdy box of Lexan or similar. Needless to say, there are some huge issues in scaling such a system up to sizes usable for projecting a movie.

- Suppose someone found a way to get rid of the spinning mirrors of death and really project light into a transparent volume; there would still be the problem of occlusion. If you were able to make one point (a voxel, the 3D equivalent of a 2D pixel) in a huge cube of perfectly transparent material light up in any colour, everyone would see that dot of light from every direction. This means only transparent shapes like jellyfish could be projected. If you projected an image of a person standing behind a car, the person would be visible through the car. There are two approaches to avoid this: either the voxels in the projection volume must also be able to absorb light on command, which is no small feat; or each projecting point must be able to send different beams of light in different directions, again no small feat. To prevent an inactive point from blocking the light of an active point behind it, it must either be transparent or incredibly small.
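The difference between voxels that can only emit light and voxels that can also absorb it can be shown along a single viewing ray. This sketch uses one grey channel and borrows the standard front-to-back ‘over’ compositing rule from volume rendering as a stand-in for a hypothetical absorbing display; it does not model the other approach, where each point sends different beams in different directions.

```python
def emissive_only(samples):
    """Display that can only emit light: every lit voxel on the ray adds up."""
    return sum(brightness for brightness, _opacity in samples)


def emit_and_absorb(samples):
    """Display whose voxels can also absorb: front-to-back 'over' compositing.

    samples are (brightness, opacity) pairs ordered from the viewer outward;
    opacity 1.0 means the voxel blocks everything behind it.
    """
    result, transmittance = 0.0, 1.0
    for brightness, opacity in samples:
        result += transmittance * opacity * brightness
        transmittance *= 1.0 - opacity
    return result


# One viewing ray that first hits a dark car, then a bright person behind it.
ray = [(0.2, 1.0), (0.9, 1.0)]  # hypothetical grey levels, both surfaces opaque
print("emission only:     ", emissive_only(ray))    # the person adds to the car's light
print("emission + absorb: ", emit_and_absorb(ray))  # only the car contributes
```

With emission only, the person's light simply adds to the car's, so the car looks like a ghost. Only when the car's voxels absorb what lies behind them does the person disappear as they should.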

To recapitulate, the ideal true 3D projection system would be a soccer stadium filled with some mystery material in which a mind-boggling number of perfectly transparent particles can each either emit different beams of light in different directions, or be toggled between a state of emitting any colour and a state of absorbing any amount of light, at a rate of at least 24 states per second. Oh yeah, that is really going to happen. Even if it were theoretically possible, a practical implementation would probably require the equivalent of a dedicated nuclear power plant to run. Pushing all the data around would on its own take an insane effort. And even if this could all work out, you'd still have a lousy view of whatever is being projected if you were too late to buy a premium seat.
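To get a feel for the data problem, here is a back-of-the-envelope calculation. All numbers are hypothetical: a 100 m × 60 m × 30 m volume, coarse 1 cm voxels, 4 bytes per voxel (colour plus an absorption value), 24 states per second, and no compression. The direction-dependent variant would multiply this further by the number of beams per point.

```python
def volumetric_data_rate(size_mm, voxel_mm, bytes_per_voxel, states_per_s):
    """Uncompressed voxel count and data rate (bytes/s) for a refreshed volume."""
    width, depth, height = size_mm
    voxels = (width // voxel_mm) * (depth // voxel_mm) * (height // voxel_mm)
    return voxels, voxels * bytes_per_voxel * states_per_s


# Hypothetical stadium-sized volume with 1 cm voxels, 4 bytes each, 24 states/s.
voxels, rate = volumetric_data_rate((100_000, 60_000, 30_000), 10, 4, 24)
stream_4k = 3840 * 2160 * 3 * 24  # uncompressed 4K RGB stream at 24 FPS, for comparison
print(f"{voxels:.2e} voxels, {rate / 1e12:.1f} TB/s, {rate / stream_4k:,.0f}x a 4K stream")
```

Even at this coarse resolution the volume needs tens of thousands of times the bandwidth of an uncompressed 4K film.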

Holograms are a slightly more viable alternative that still produces the illusion of real 3D shapes without requiring a huge projection volume or glasses. There is ongoing research into moving holograms, but they're still light years away from producing a cinema-sized image at an acceptable frame rate. Even if we ever get there, there is still the problem that holograms have very poor colour reproduction, require the correct kind of lighting, and can only be viewed properly within a limited viewing range. It would be cool and potentially useful for projecting small objects or images of space princesses in distress, but pretty much unusable for a feature film.

Update 2024: Sony's SRD

This is a new entry in the market of stereoscopic display solutions that warrants its own section. In full, SRD stands for Spatial Reality Display. Sony's system is an evolution of the older fixed lenticular displays like the one from Philips, where tiny lenses redirected the left and right eye images towards certain viewing angles relative to the screen. The new trick the SRD has up its sleeve is that each pixel now has its own lens that can be actively steered. An eye-tracking camera determines where the spectator's eyes are relative to the screen, and the lenses are adjusted in real time to ensure each eye sees the correct image at all times. It is an amazing technological feat.

It does not need to end there: because the spectator's 3D position can also be obtained this way, the 3D shapes can be adjusted accordingly, to create an illusion of a true 3D scene that can be viewed from a wide range of angles.
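Adjusting the rendered scene to a tracked viewer is commonly done with an asymmetric (‘off-axis’) view frustum per eye: the screen is treated as a fixed window, and each eye's camera sits at the eye position with its axes aligned to the screen. I do not know how the SRD implements this internally, so treat the following as a generic sketch of the technique. The coordinate convention, the level-head assumption, the screen size and the head position are all assumptions for illustration.

```python
from typing import NamedTuple

IPD_M = 0.063  # assumed eye separation, in metres


class Frustum(NamedTuple):
    left: float
    right: float
    bottom: float
    top: float
    near: float


def off_axis_frustum(eye, screen_w, screen_h, near):
    """Asymmetric view frustum through a physical screen for one tracked eye.

    Coordinates in metres: the screen is centred on the origin in the z = 0
    plane, and the eye sits in front of it at z > 0. The result uses the
    left/right/bottom/top-at-near-plane convention of glFrustum-style matrices.
    """
    ex, ey, ez = eye
    if ez <= 0:
        raise ValueError("the eye must be in front of the screen (z > 0)")
    scale = near / ez  # similar triangles: screen edges projected onto the near plane
    return Frustum(
        left=(-screen_w / 2 - ex) * scale,
        right=(screen_w / 2 - ex) * scale,
        bottom=(-screen_h / 2 - ey) * scale,
        top=(screen_h / 2 - ey) * scale,
        near=near,
    )


def eye_positions(head, ipd=IPD_M):
    """Left and right eye, assuming a level head facing the screen."""
    hx, hy, hz = head
    return (hx - ipd / 2, hy, hz), (hx + ipd / 2, hy, hz)


head = (0.10, 0.05, 0.60)  # hypothetical tracked head position in front of a 0.34 m x 0.19 m screen
for eye in eye_positions(head):
    print(off_axis_frustum(eye, 0.34, 0.19, near=0.01))
```

When the head moves, the frustum skews instead of the camera turning, which is what makes the objects appear to stay put behind the screen as if it were a window.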

As far as planar ‘3D’ displays go, the SRD is the most convincing I have seen so far, especially when actively rendering a 3D scene and adjusting it to match the spectator's position. You really get the illusion of looking at real 3D objects. No glasses are required, which is a big advantage.

The system does have disadvantages however.

- The most obvious problem is that it only works for a single spectator. The micro-lenses can only be optimised for 1 pair of eyes. If someone else watches the screen at the same time, they will see weird things. There is no such thing as graceful degradation here; either a single spectator sees the correct image, or 2 or more spectators see junk if the eye tracker starts dithering between their eye locations or if it would attempt to aim for some kind of average.

- And of course, it still suffers from the focus discrepancy, a.k.a. the vergence-accommodation conflict. The spectator needs to focus on the surface of the screen, not at the actual expected 3D depth. In the demos I have seen, the problem was not that bad, but I did notice fatigue, especially when watching the screen from a close distance.

The SRD will likely be best suited for demonstrations where one person interacts with, for instance, a 3D model of a product, and for CAD design, arcade-style games, or medical applications, but don't expect it to conquer cinemas, whether in big venues or at home.

The Bottom Line

‘3D’ is one of those recurring hypes that pop up every so often because most of the people who lived through the previous hype have forgotten why exactly it died. The reasons are simple. On the one hand, 3D is far from essential and only substantially adds to the movie experience for a small subset of films, and only if it is done right. And doing it right is difficult. On the other hand, it is expensive to create and project, and therefore also more expensive at the box office. It requires the spectator to wear annoying glasses that inevitably halve the light intensity, so 3D films look dark on converted existing projectors. Methods that do not require glasses have other problems. Moreover, having stereoscopic images forced upon you will always be more fatiguing than watching real 3D shapes. It is not the same as watching things in a real 3D world.

That is a whole lot of extra cost for only a small benefit. Really, what sets ‘3D’ apart from other advances in cinematography like colour or surround sound is that it requires a significant extra effort from the spectator, while all those other things do not. To top it off, for a considerable number of people there is no benefit at all, and for some there is only added nuisance. If you are torn between buying a 3D TV and spending the extra cash on a larger, higher-quality 2D TV, I'd personally go for the latter. Only if you plan to do some 3D gaming might it be worthwhile to invest in 3D equipment. As soon as interaction is involved, 3D is much more rewarding than in a passive experience like a film.

The 2009 hype seemed to be stronger and longer-lived than its predecessors, probably because the technology is more advanced and the movie industry kept pouring a lot of effort and money into it to keep it alive. But even that will not prevent the hard truths from dawning on the general public in due time. It is silly to expect every film to be shot in or converted to 3D, and it is even sillier to expect 2D photography and cinematography to be entirely replaced by 3D. The more films try to be ‘3D,’ the quicker the public will get sick of it.

I am not saying that 3D cinema or television is completely useless and should be abandoned and buried. There are cases where it really beats 2D, like sports broadcasts (no more ambiguity where the ball is exactly) or in medical contexts. I believe when it comes to entertainment, 3D is best consumed in small portions. It always delivers its biggest ‘wow’ effect when you haven't seen it for a long time. The correct approach to keep ‘3D’ alive would be to keep it a niche market as it should be, only producing a spectacular, well-made 3D film like Avatar perhaps every odd year, with possibly some shorter features in between. These should be projected in a few specialized theatres with 3D-optimized equipment, not in half-assed-hackjob converted venues. I am certainly willing to occasionally put up with all the nuisances of 3D cinema if it is worth it, but not every time I go to the movies just to see thirty seconds of worthwhile effects and ten stupid scenes that were only inserted to remind me I am watching a 3D film that didn't really need 3D in the first place.

It is not a bad thing that perfect 3D cinema will always stay out of reach. That makes it much more exciting every time it stirs up yet another doomed revival.

(1): There is a ‘myth’ stating that one of the motivations behind the 24 FPS standard is that this framerate has a certain soothing effect on the spectator. This may be more than just a myth but I can't find any hard facts about it. Nevertheless, I am inclined to believe there is something to it. When watching films on a TV set that interpolates frames to double the framerate, I find there is something uncomfortable about scenes that are supposed to be relaxing. The films have lost their ‘cinema experience’ and I doubt it is due to imperfections of the interpolation algorithms. I believe Douglas Trumbull is entirely right when he claims there is a benefit to varying the framerate depending on what effect is desired within certain parts of the film, for instance 24 FPS for quiet scenes and 60 FPS for action. The funny thing is that with TV sets that ‘inflate’ the framerate, it is exactly the inverse because the interpolation algorithms only work well on quiet scenes.
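Why interpolation struggles with fast motion can be seen in a toy example. To invent an in-between frame, the TV has to know how things move; if it simply averages two frames, a moving object turns into two half-bright ghosts. The sketch contrasts that naive blend with an idealised shift that assumes the true motion is known and uniform. Actual TV interpolation estimates motion vectors in far more elaborate ways that I cannot speak for, but fast or complex motion makes that estimation harder, and its failures produce artefacts of this kind.

```python
def blend_midframe(frame_a, frame_b):
    """Naive interpolation: average the two frames pixel by pixel."""
    return [(a + b) / 2 for a, b in zip(frame_a, frame_b)]


def shifted_midframe(frame_a, motion_px):
    """Idealised motion-compensated interpolation when the true motion is known.

    Assumes the whole frame moves uniformly by motion_px pixels between frames,
    so the in-between frame is frame_a moved half-way.
    """
    half = motion_px // 2
    out = [0] * len(frame_a)
    for x, value in enumerate(frame_a):
        if 0 <= x + half < len(out):
            out[x + half] = value
    return out


a = [0, 0, 1, 0, 0, 0, 0, 0, 0, 0]  # bright dot at column 2
b = [0, 0, 0, 0, 0, 0, 1, 0, 0, 0]  # same dot one frame later, at column 6
print(blend_midframe(a, b))    # two half-bright ghosts instead of one dot
print(shifted_midframe(a, 4))  # one dot half-way, at column 4
```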

(2): I wrote this part around the end of 2010. Now, in mid-2012, it seems my prediction has some merit: “The Hobbit” is filmed at a high frame rate (48 FPS). Advance screenings have shown this to look awkward to many people. As explained in footnote 1, I believe it would make more sense to vary the frame rate: stick with the classic 24 FPS for scenes that should have a more ‘relaxed’ cinematic feel, and throttle up to 48 FPS for fast-paced or action sequences.

Go to the blog post that announces this article if you would like to comment.