A Plea to Preserve Meaningful Referrer Headers

Google is pushing a new stricter default for the Referrer-Policy header under the veil of privacy. Not only have they implemented this default in Chrome, they are also lobbying for everyone to configure it in their web servers. Is privacy truly the motivation of this company that got rich on dubious respect for the privacy of their clients?

This article is a plea not to blindly follow suit by nailing shut the referrer header in every possible case, because that may do more harm than good.

What is the Referrer-Policy Header?

The HTTP protocol has a certain header with the annoying “HTTP_Referer” name. Yes, that is indeed misspelled and should be “referrer,” but this just happens to be how it wormed its way into the standard. When arriving on a webpage through a hyperlink, this header used to contain the full URL of the page the visitor came from. With the internet evolving from a mere collection of informational webpages towards more interactive applications where personal information could be derived from what URL visitors came from, this behaviour became undesired in certain situations. Enter the Referrer-Policy header.

The Referrer-Policy header specifies to browser applications what value the referrer header may have in what situations. For instance the referrer could be limited to only the originating website's domain when the visitor follows a link that leads to another domain, or it could be left entirely blank. This offers website designers much more control over what kind of information could be leaked towards other websites when visitors follow a hyperlink. If for instance someone is as incompetent as to design their website such that usernames and passwords are being directly used in URLs, they could hide their incompetence by leaving the referrer header blank. Yeah, you can probably already feel where this article is heading. But let's first continue with sketching the context.

A New Default

Until around 2018, the universally accepted default value for Referrer-Policy was “no-referrer-when-downgrade.” This states that the full URL is allowed in the referrer header, unless a link on a secure HTTPS webpage leads to an insecure HTTP webpage, in that case the referrer is entirely omitted. A major motivation for this former default was probably that any website served over HTTPS was more likely to be of some private nature, while plain HTTP websites were deemed the scum of the earth that were out to steal private information. In a certain way this has indeed become an outdated idea, because there has been a general drive to make every website be served over HTTPS, to ensure that certain exploits and attacks like eavesdropping or code injection are no longer possible. This has lead to even the scummiest websites now also being served over HTTPS. Thanks to companies like Google who were one of the main driving forces behind this, when you are now being scammed, at least it happens securely.

Then came a gradual push for a new Referrer-Policy default: “strict-origin-when-cross-origin.” This means that links within the same domain will still have a complete referrer, but when the visitor follows a link leading to a page on another domain, the referrer will be truncated to the originating domain only. For instance if one would click a link on webpage at:

www.foo.com/somepage.html

that leads to:

www.bar.com/otherpage.html,

then the person maintaining the www.bar.com webserver would only find “www.foo.com” in their webserver logs, instead of the full URL.

Not only is Google making this the default in their Chrome browser if a website does not provide the header by itself, they are also promoting this new default as the generally preferred header value on their web.dev website. Isn't it a bit weird that exactly this company is now the primary driving force behind this new default?

Questionable Motivations

As far as I remember, the aforementioned reasoning about the rise of HTTPS was not the primary reason for this new default. No, in the tradition of typical human nature, it was a response to some kind of near-disaster. Right before the push for this new default started, there had been major news stories about certain healthcare websites leaking personal information through for instance the HTTP_Referer header when users clicked ads. Yes, healthcare websites carrying personal information were for some reason serving ads hosted by potentially malicious actors or providing other types of hyperlinks to untrustworthy outside websites, but let's just ignore this worrying fact for a moment. There were also websites that merely relied on deep URL paths to serve content not supposed to be viewed by everyone—in other words, security through obscurity.

A stricter Referrer-Policy header can fix the problem in both the above cases, but anyone with half a brain can see that both scenarios are manifestations of utterly poor website design and blatant incompetence. Even in an intranet website no sane person would transfer patient data through URLs, that's just stupid design. Putting usernames and passwords in URLs is beyond stupid. Anyone doing that should not be allowed to write software, neither should anyone treating security through obscurity as their core security model.

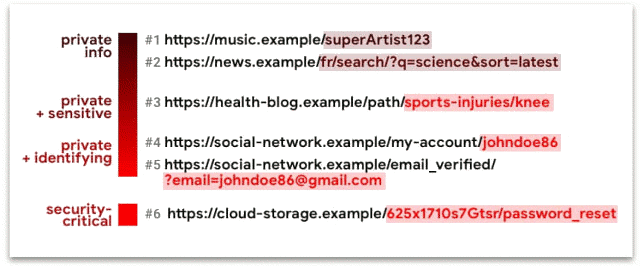

Yet, on the aforementioned web.dev page, we get exactly such examples, as shown in this image taken from the page on 2021/02/22 (licensed under Creative Commons Attribution 4.0 License).

Apparently we now live in a world where it is already frowned upon if one is interested in what music someone else listens to, or might figure out that this someone reads scientific news articles written in French. I'm having a hard time imagining scenarios where such information can be abused. “You read about science in French!” — “Sacrebleu! I'll give you $50 if you promise not to tell my friends!” Hmm, maybe not.

The health example is actually the only truly valid one in that entire illustration. The examples below it are borderline ridiculous. They may have been valid around the year 2000 when web technologies were not advanced enough to keep user names and mail addresses out of URLs, but this is the year 2021. There is no valid excuse to pass such information through URLs anymore, aside from utter incompetence.

The last example is a password reset URL. It also seems a legitimate example, until one wonders what kind of idiot website designer would allow a user to go straight from the securest of secure pages towards an untrustworthy external website, especially given that the only possible action on such page should be the clicking of a confirmation link or button. Moreover, a password reset token must be valid only once and for a very limited time. That makes this another example of utterly poor website design which should not even exist in the first place. This again raises the question why a company like Google resorts to examples like these to push a new default, instead of putting more emphasis on not being an idiot while designing websites.

Better Motivations

Granted, we cannot expect every webmaster to be competent and we cannot blame users for incompetence of website maintainers. Moreover, there are indeed cases where forms of personal information can seep towards other domains via the referrer and be exploited in some way, for instance the health example shown above, or someone's financial situation when they follow a link on a site about mortgages or something. Therefore from a user point-of-view I admit that it does make sense to use a stricter default for the Referrer-Policy header when a website does not specify it—which in a sense could be a sign of webmaster incompetence in itself.

There are also some very clear-cut cases where outside websites have absolutely no business with internal URL paths. Take certain web apps for instance, where everything is inherently personal and volatile, or the URL has no meaning at all. In such environments it often makes sense to leave the referrer entirely blank because no website owner will care much that someone posted a link to their site in something extremely volatile like a message on Slack or similar. I do not consider web apps to be true websites anyhow, even though in many cases they are built upon the same technology.

Just as a total sidenote, aren't you wondering why you need to download an ‘app’ for the most trivial things nowadays, things that can be perfectly served in a website? I absolutely hate this trend because it forces me to do everything on the annoyingly small screen of a stupid smartphone. It's quite simple. Most of those “apps” are still ordinary websites, only hidden behind a façade. Apps put users in a nicely controlled cage. You usually need to register to get access, which immediately gives the publisher of the app all your privacy on a plate, instead of having to guess and track it from your browsing behaviour on a regular website. The referrer header is absolute peanuts in comparison. Also, it is much harder or impossible to use an ad blocker on apps than on websites in a browser. You cannot block the ads which aren't just annoying, but now also know exactly in what context you view them. Oh, brave new world. But hey, I am digressing again.

Default ≠ Best Practice

If you maintain a website of some sort, which is not unlikely if you ended up on this page and made it as far as this paragraph, and it serves content of a truly public nature, then what I am now going to ask you is not to blindly slap this new default onto everything (or let a browser do it for you), but instead seriously consider sticking to the previous default of ‘no-referrer-when-downgrade’ by explicitly configuring it in your web server. I know I am fighting a losing battle here due to the forces involved when it comes to pushing ‘strict-origin-when-cross-origin’ as the new panacea, but at the least I can try.

Let me explain. Within the category of websites whose primary aim is to just disseminate information that might be useful to the general public without political or financial aims and that is not of a highly private nature, like the very website you are currently visiting, there is no justifiable reason to obscure the interactions between such websites. Before the effects of this new default became noticeable, I used to be able to see from visitor statistics what exact content on what other websites linked to what pages on my site. I could then look at that content and engage with it, e.g. join in the discussion, or look whether I couldn't improve my content to better match what people expect. Now with the HTTP_Referer header butchered like this, at best I only see some domain in my statistics and I don't care. No, I won't visit your domain and start excavating the whole site to find out where the link came from. I also won't waste my time and money on services that continuously crawl the entire internet to map all the links and then ask money for accessing this information. I will just ignore the domain in my statistics and assume my content is good enough as-is, even though past experiences have taught me it probably is not.

Having websites freely link and interact with each other was one of the core ideas behind the original Internet. Obscuring all these interactions for no good reason is kind of acting like an asshole. Yes, it increases “security,” but as everyone knows: security is the opposite of usability. If you want your computer to be 100% secure, shut it down and then grind it to dust. Just as with the increasing trend to add a ‘nofollow’ attribute to links for no good reason, this new policy mostly hurts small websites by increasing their isolation, and makes things harder for newcomers, further strengthening the existing monopolies. I doubt whether Tim-Berners-Lee would approve of this. Sad, sad times.

An Attempt at Sensible Guidelines

What could be good reasons to leave Referrer-Policy at its previous default value of

‘no-referrer-when-downgrade’? One could argue: any webpage that does not fall into one of the aforementioned categories, hence:

- not designed by incompetent idiots,

- not web apps, and

- not offering opportunities to somehow leak personal information that could be exploited.

Therefore if a webpage:

- does not serve content that is of such a nature that merely knowing someone viewed it gives away exploitable personal information about that visitor,

- only has links you control and you see no problems in the linked sites knowing where the link came from (if you do, then I do wonder why you are linking to them at all),

- contains no ads or analytics junk controlled by some company with a dubious moral compass,

then it is simple: there is no reason to hide the referrer. Even stronger, hiding the referrer in such case is only an act of shooting oneself in the foot, because you will prevent others from discovering from what kind of context you are linking to their website—if they can see the links coming from your domain at all. Visibility of your own website will be reduced.

Things get more complicated when the webpage does contain ads and analytics. I plead guilty: there are Google ads and analytics on this website. Yet still I left the header at ‘no-referrer-when-downgrade’ instead of giving in to the privacy Nazis. Why? Three reasons:

- I control all the links on this website except for the ones inside ads, and I believe anything I link to is entitled to know where exactly the link comes from.

- As for the ads or other sites the links point to, I do not believe this website has any content that when connected to a visitor's identity is exploitable in a bad way. If anyone believes otherwise, use the mail page and tell me, because I am really curious and willing to adjust my stance if provided with solid arguments.

- Any visitor who is strict about their privacy, will or at least should have installed an ad blocker anyway, and other privacy enhancements that enforce a Referrer-Policy of their own choice. I do not mind this at all, the ads are just an extra to cover a measly part of the costs of hosting this website. Yes, it costs real money to host a website outside the it's-free-if-you-sell-us-your-soul frameworks. Luckily some people are friendly enough to donate, which usually covers the remainder of the costs.

If the situation for your website is not that clear-cut, or you simply are more paranoid than I am, then you can resort to a whitelisting kind of approach. Use a strict Referrer-Policy default to ensure any links you have no control over won't leak referrers. Then, use more permissive values for individual pages that have no foreign ‘parasites’ attached to them, or individual links that you control and endorse. For pages, you can add a meta tag with name="referrer", and for individual links you can add a referrerpolicy attribute. Both accept the same values as the header.

Quite a few websites nowadays offer interactive content where users can post content including links, without any supervision. What to do in such cases? Perhaps you do not want each of those links to carry a complete referrer. It's a bit of a similar story as with the nofollow attribute on such links. If you have no means to validate every link that originates from your site and endorse it, then you probably don't want other websites to give an impression that you do. On the other hand, referrers pointing to specific forum posts were often some of the most useful ones I saw in visitor statistics, when it came to knowing what brought visitors to my site and whether I was providing useful content. (Mind how I absolutely hate having to use a past tense here.) Therefore on forum websites where members can be verified to be trustworthy, I would like to see the full referrer preserved on links created by such users. Of course this is not trivial to implement, but that does not mean nobody shouldn't try.

Wikipedia is a nice example of the above. They have also rolled out the dreaded stricter header value a while ago because there are scenarios where links attached to articles can incite others to manipulate the articles. It would be nice if they would limit this to only the type of articles where that scenario applies, but of course it is difficult to devise a system for making that kind of distinction. Therefore although it makes me sad, I can understand their decision.

Search Engine Analogy

As the above shows, there is no clear guideline as to how strict one should tweak Referrer-Policy headers, meta tags, or attributes. The lazy solution is like Google's: just nail everything shut. That is a poor solution which hurts smaller websites like this very one, so please don't be lazy. I am inclined to say everyone should follow their common sense, but then we're back at the competence thing… oh no. Something that may help, is to think about how your content should be treated by search engines. This is not a foolproof method but it does give a sensible starting point to decide how much referrer information to pass to outside sites.

- If you would never want to have a webpage show up in a search engine's search results, then that page should also never leak any referrer information, not even the origin. Of course it is not the referrer that determines whether it shows up in search engines, this must be controlled by other means. It's just that the motivations for these two decisions are very similar, which makes this a sensible guideline.

- If on the other hand you want your website to be findable in a search engine but the search results should not link to parts of the site deeper than only the homepage or maybe a few high-level pages, then

strict-origin-when-cross-originon the deeper pages probably is your friend. - If however you tend to aim for your webpages to show up prominently in search engines, then you should investigate whether there are no strong objections against sticking with

no-referrer-when-downgradeto maintain visibility besides search engines as well. An obvious objection is when the page contains information that is of a highly private nature when linked to the knowledge that a certain visitor has looked at it, for instance medical, financial, or political information.

Remember, if there is no good reason to obscure the referrer, then sabotaging it on your own site will probably harm your traffic without helping anyone else. And if your website has helpful content, then harming its traffic harms everyone in the long stretch.

Now Really, Why the New Default?

As shown in the above example given by Google, the companies promoting the new stricter header value as the new best practice, motivate it with supposedly improved privacy or security, the new buzzwords that are being abused by companies to push modifications to standards and laws only for their own benefit, not the customer's. The same company (Google, or should I say Alphabet these days) that got stinking rich by avidly tracking internet users, is now pushing a “security” measure supposedly to thwart such tracking, the irony is thick in this one. What follows is mostly speculation from my part, but the more I think about it, the more it makes sense.

One of the main motivations for this new default, is that a lot of webpages these days contain ads, and it are especially those ads that can leak referrers to outside websites that could be of dubious nature. Now, do you really believe blocking the HTTP_Referer header will make it that much harder to track visitors? There are countless other ways to do it, using unique identifiers and time-related information. Throw enough big data against it, and visitors can be tracked and identified purely from timestamps and known relations between websites. It does require more computing power, but that is not something Google is in short supply of, and they already have an extensive map of the entire internet thanks to their search engine.

Moreover, ads should already know what website and perhaps what exact page they are on, to be able to pass revenue to the website owner. Breaking the referrer header barely makes any difference if any at all.

Again, those who don't want to be profiled will have tweaked their browsers to block all things that could leak information, or will even browse through Tor, a randomising VPN, or something similar. This new default won't change anything for them. Maybe the average public believes such things are overkill and still has faith in this new default pushed forward by the same company that got rich by serving targeted ads. Oh, they probably have no hidden agenda at all. I bet those at Google who decided to change this default chuckled and thought: “haha, see how we fool them into believing we offer more privacy, while actually we make it harder for other companies with less resources to compete with our ad network, while we can just keep tracking using data mining! Double whammy!” Did someone say something about never becoming evil? Must have been my imagination…

What bothers me the most about the indiscriminate promoting of this new stricter value for Referrer-Policy, is not so much the fact that it reeks as if the company which is its biggest proponent, seems to have a hidden agenda of sabotaging their competitors who lack the budget to migrate to big data infrastructure, while they themselves can happily keep tracking visitors even without this header. That is a worrying fact because it makes the biggest companies even stronger in this dubious business of making money off gathering data about people without their consent. But, it is not the worst consequence of this new strategy.

No, what bothers me so much is that it is an act of carpet-bombing the entire Internet with a measure that protects visitors only in a very limited set of situations, while in many other situations it only harms everyone in the long run. First the smaller websites because this new default increases their isolation, then the visitors who get jailed into a limited ecosystem of mainstream websites because the smaller websites die off or are hampered in their means to improve upon their content.

I have this goal of preserving this very website as one of the apparently increasingly scarce islands of nostalgic friendly Internet as long as possible. I do not like this trend of the Web transitioning from what used to be a forum open to everyone, towards a collection of walled gardens, gated communities where everyone is utterly paranoid and sour, and expects to be exploited in every possible way if they don't sell their soul to a big tech company. For some reason the Internet seems to be regressing towards a bunch of isolated semi-private clubs as was the case in its earliest days or even before it existed. Why bother doing that bit of extra effort to use open protocols, if it is so much easier to install a proprietary app, or build a website from a template hosted by a big company that first requires you to sign a EULA that probably states they can do pretty much anything, but you wouldn't know because nobody reads those EULAs anyway? And to make things worse, all those walled gardens are owned by a very small number of big companies who have full control over all the communication within their gardens, again thanks to those EULAs. These same companies are now actively attempting to change the core protocols of the Internet for ther own benefit, and they are starting to succeed in this, ugh. Blasting the entire internet with this new default header only helps these companies to strengthen their monopolies and raise the walls around their gardens, making it even harder for small independent websites to thrive.

Conclusion

If you host a website of some kind, please do not lazily apply the strictest guidelines and consider it done. Whether it is better to set a strict default and make exceptions for specific cases, or set a lenient default and tighten in specific cases, depends on what kind of content you are usually serving.

When it comes to referrer headers, setting stricter policies than necessary could harm your traffic for no good reason. If your website contains public information that can do little or no harm when connected to a visitor's identity, it makes sense to use a default response header “Referrer-Policy: no-referrer-when-downgrade.” If on the other hand the content is of a highly personal nature, strict-origin-when-cross-origin should be the default. If even your domain name alone may already give away private information when connected to a visitor's identity, or your website is merely an app with only very volatile content, then a value of no-referrer probably makes the most sense.

You can override a site-wide Referrer-Policy default for specific pages with a META tag, for instance:

<meta name="referrer" content="no-referrer-when-downgrade">

You can also override it for specific links with an attribute on the link tag, for instance:

<a href="https://xyz.com/" referrerpolicy="strict-origin">

Both accept the same possible values as the Referrer-Policy HTTP header.

If you want a link to have no referrer at all, this can also be done by setting its rel attribute to "noreferrer". But again, please think twice before doing this.

Do not rely on any defaults that may or may not be set by a visitor's browser. You can never be sure what those defaults are and when they will change. Explicitly set your own default header in your webserver's configuration.

And as a final note: when you have control over links you create on some other site towards your own site, you can still easily detect when visits come through that exact link, even when the other site has totally blocked the referrer altogether. For instance, GitHub has now decided to totally block the referrer for the link one can add to one's own repositories. Seriously, I don't know why I am being denied the possibility to see which of my own managed repositories cause visits to my own site. But, the solution is simple: just extend each link with a unique ID in a query parameter, which will then act as a surrogate referrer, and then you can keep collecting statistics for how many visitors arrive on your site through each specific link.